Goal:

Ever heard of Helm? Or Kubernetes? You have? … Are you lying? Either way, below is how to use Helm to easily manage apps in Kubernetes.

Lessons Learned:

- Do it. Just do it. Install Helm.

- Helm has an official installer script that grabs the latest version of Helm & installs it locally

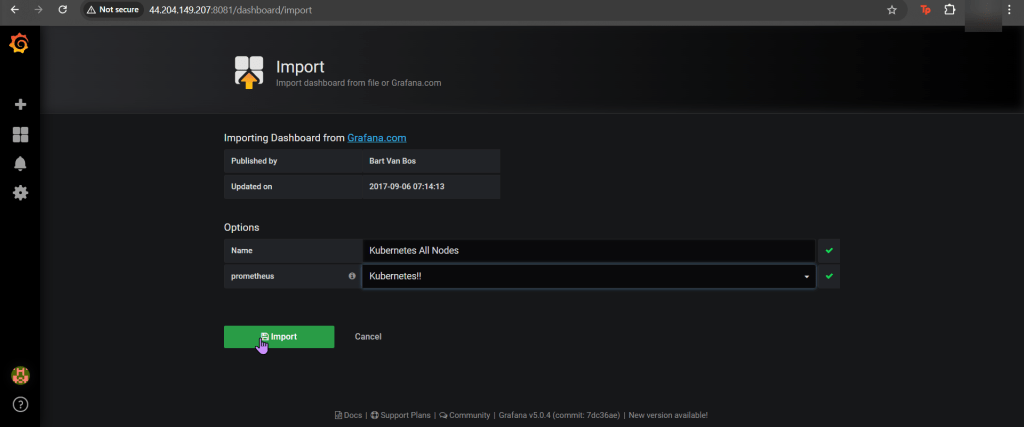

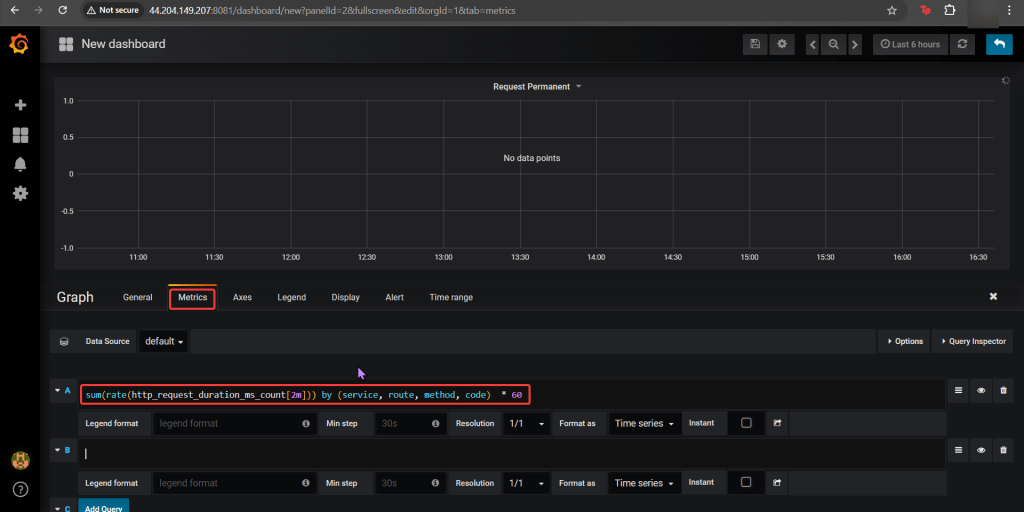

- Put the lime in the coconut…aka – Install a Helm Chart in the Cluster

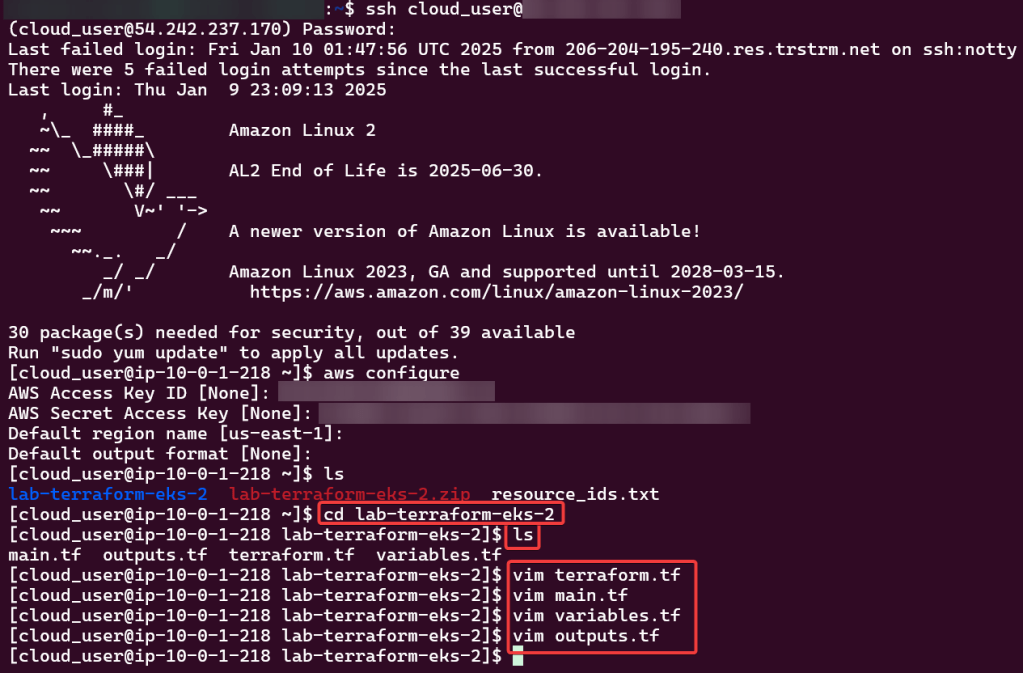

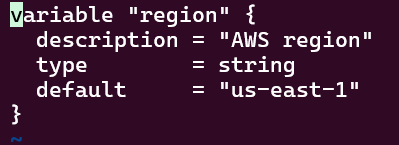

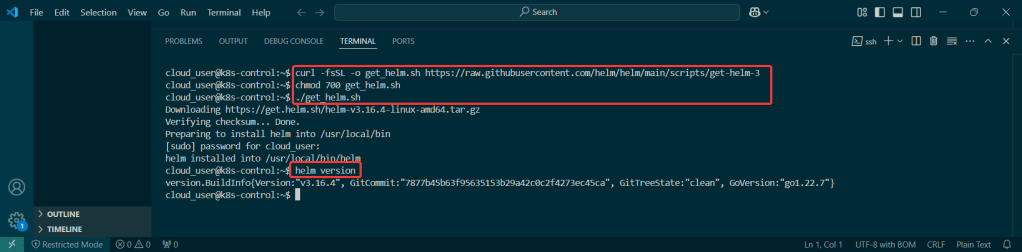

Do it. Just do it. Install Helm:

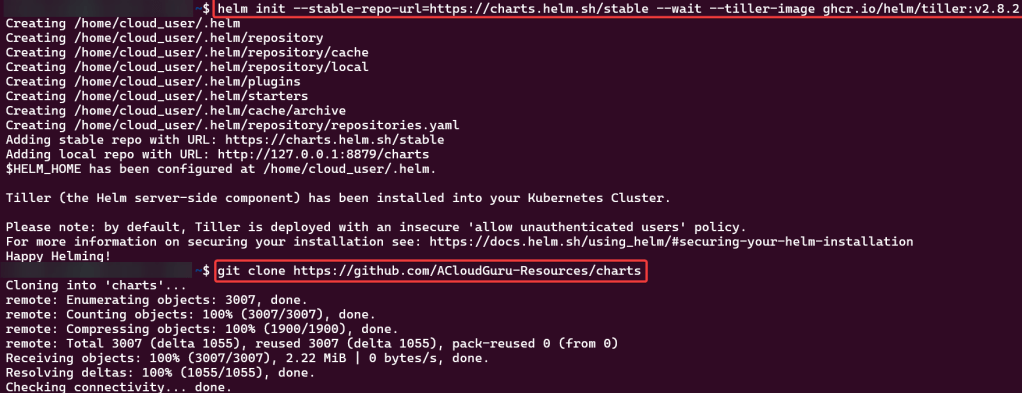

Curl & chmod to get Helm:

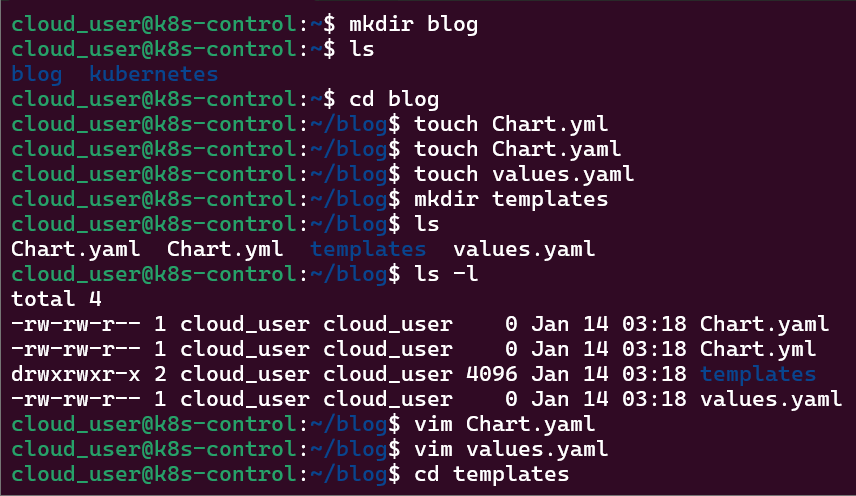

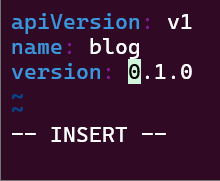

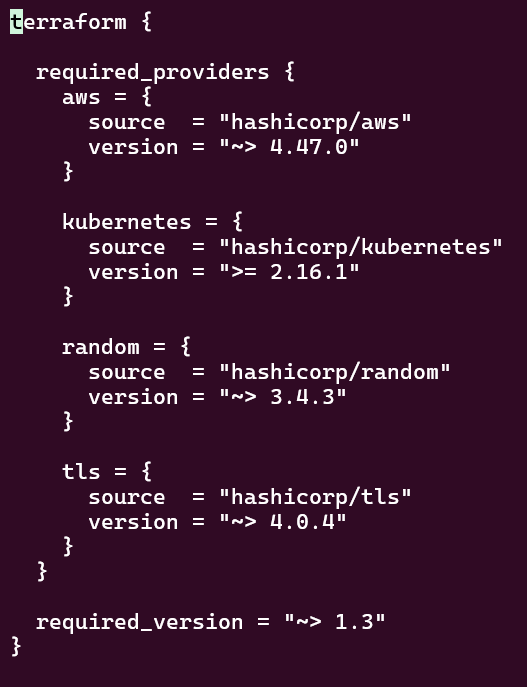

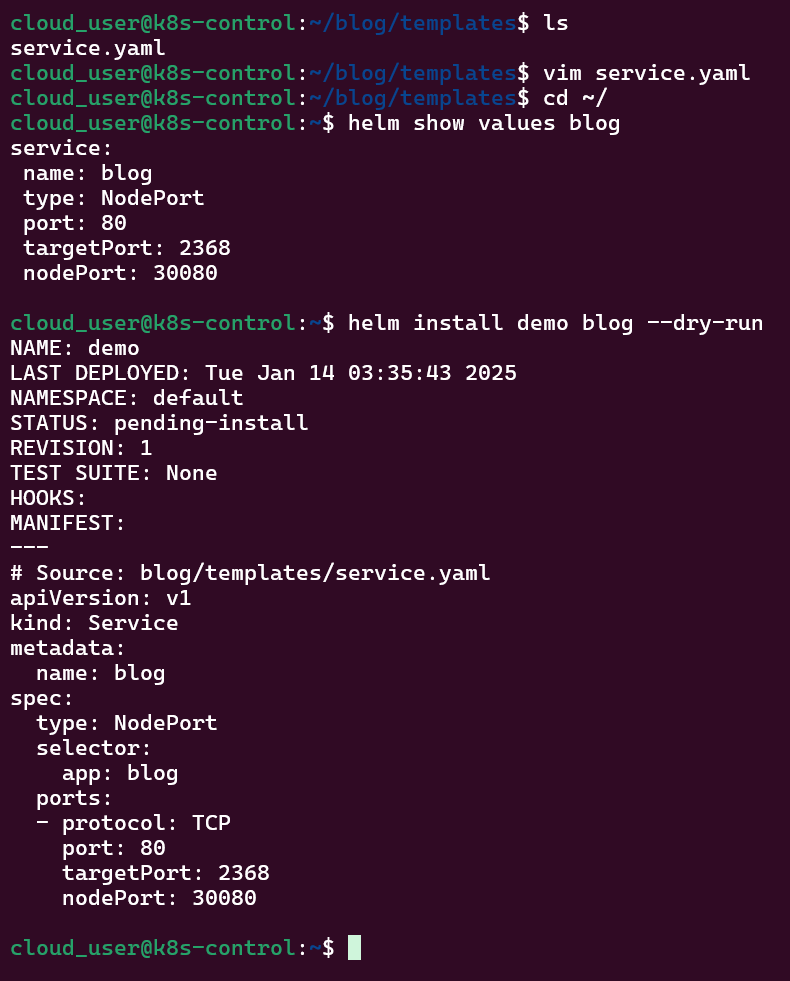

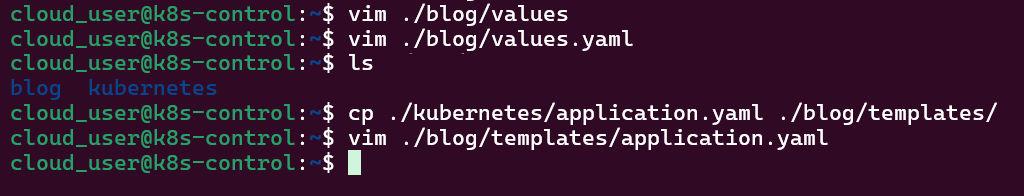

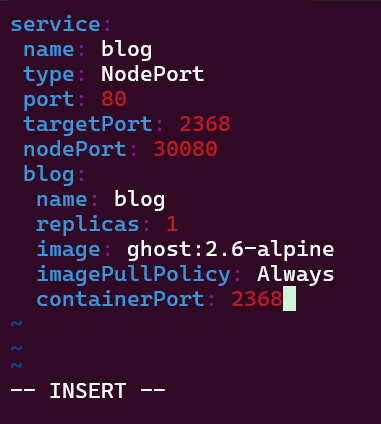

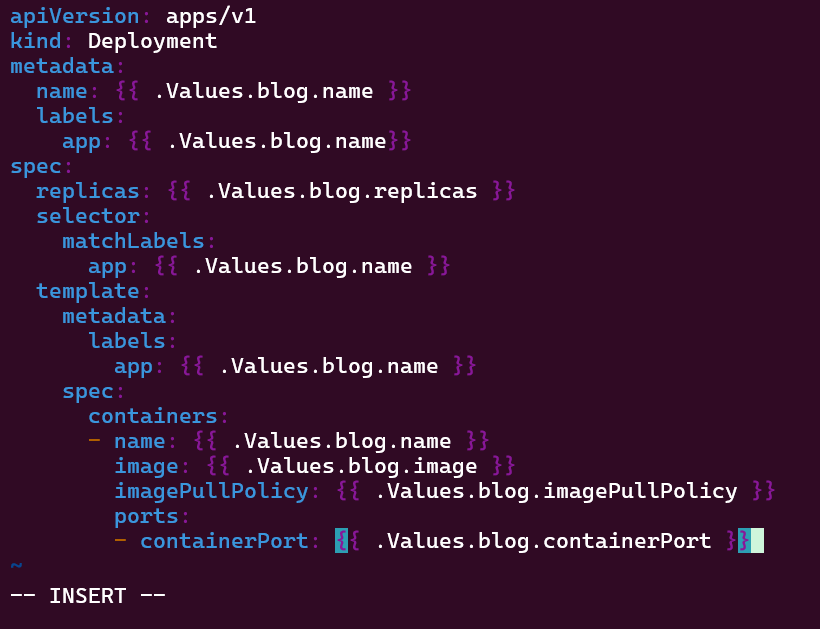

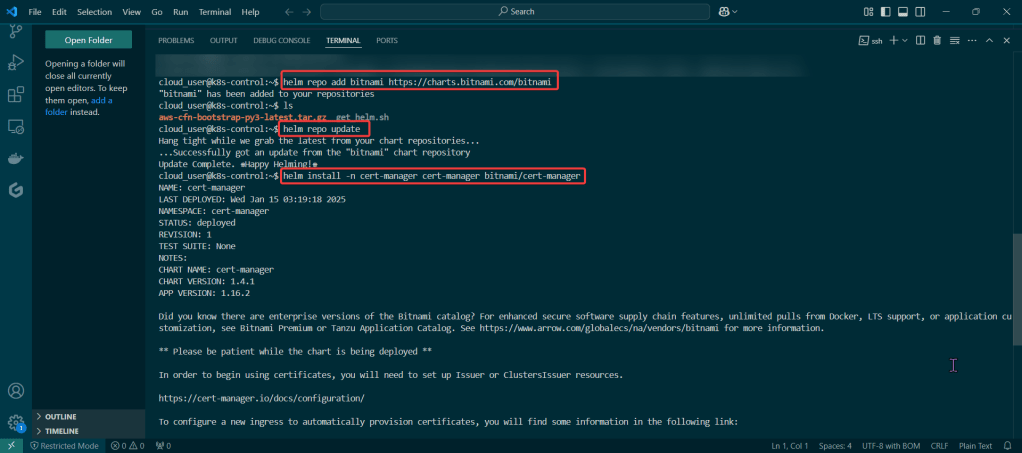
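For anyone who can't read the screenshots, the curl & chmod step can be sketched in text form roughly like this. The installer URL and the `get_helm.sh` file name are assumptions taken from the Helm 3 install docs, not copied from the screenshots, and `install_helm` is a hypothetical wrapper name used only for illustration.

```shell
# Minimal sketch of the curl & chmod install flow.
# Assumption: URL and file name follow the Helm 3 install docs; check the docs for your Helm version.
HELM_INSTALLER_URL="https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3"
HELM_INSTALLER_FILE="get_helm.sh"

# install_helm is a hypothetical helper name, not part of Helm itself.
install_helm() {
  # Download the installer script: -f fails on HTTP errors, -sS is quiet but
  # still prints errors, -L follows redirects, -o names the output file.
  curl -fsSL -o "$HELM_INSTALLER_FILE" "$HELM_INSTALLER_URL" || return 1
  # Make the script readable, writable, and executable for the current user only.
  chmod 700 "$HELM_INSTALLER_FILE" || return 1
  # Run the installer, then confirm the helm client responds.
  "./$HELM_INSTALLER_FILE" || return 1
  helm version
}
```

Calling `install_helm` from a directory you can write to runs all three steps and stops at the first one that fails. It's worth skimming the downloaded script before running it, since it installs a binary on your machine.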

Put the lime in the coconut…aka – Install a Helm Chart in the Cluster:

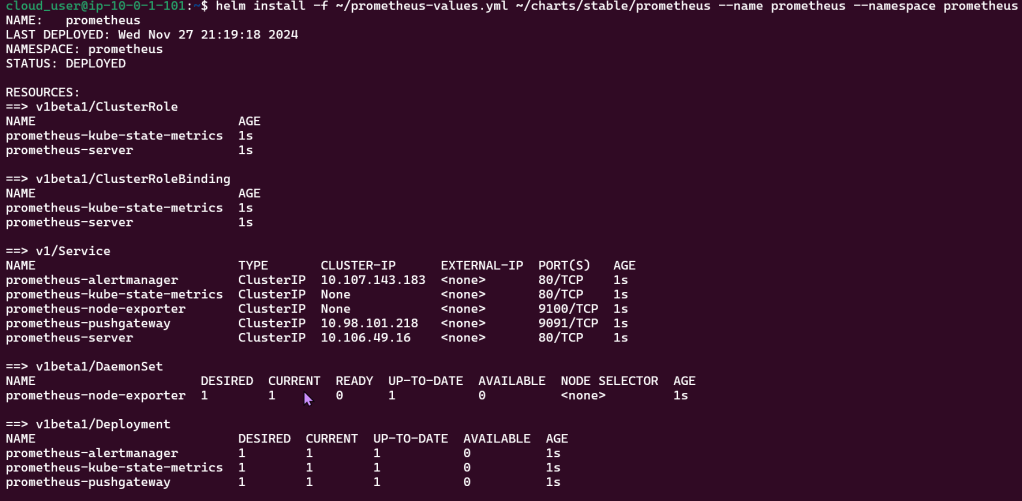

Add the Bitnami repo, update the chart listing, & install cert-manager in its own namespace:

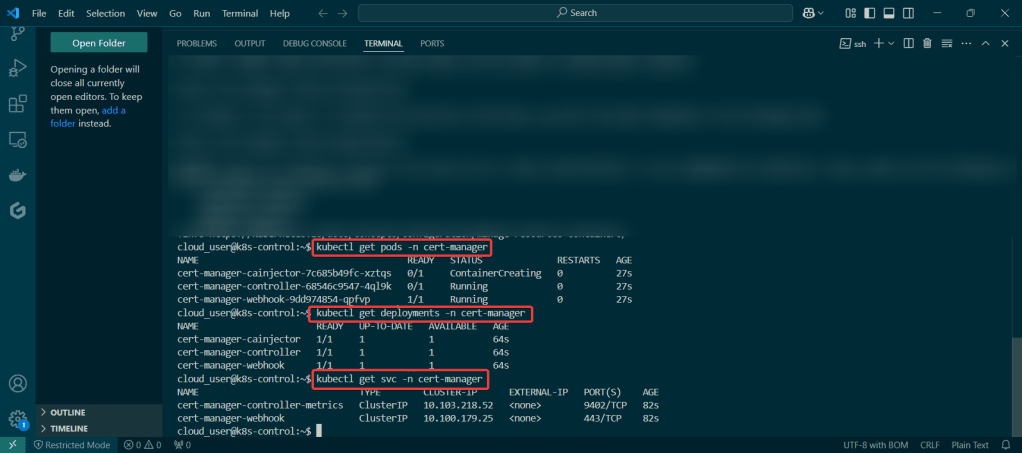

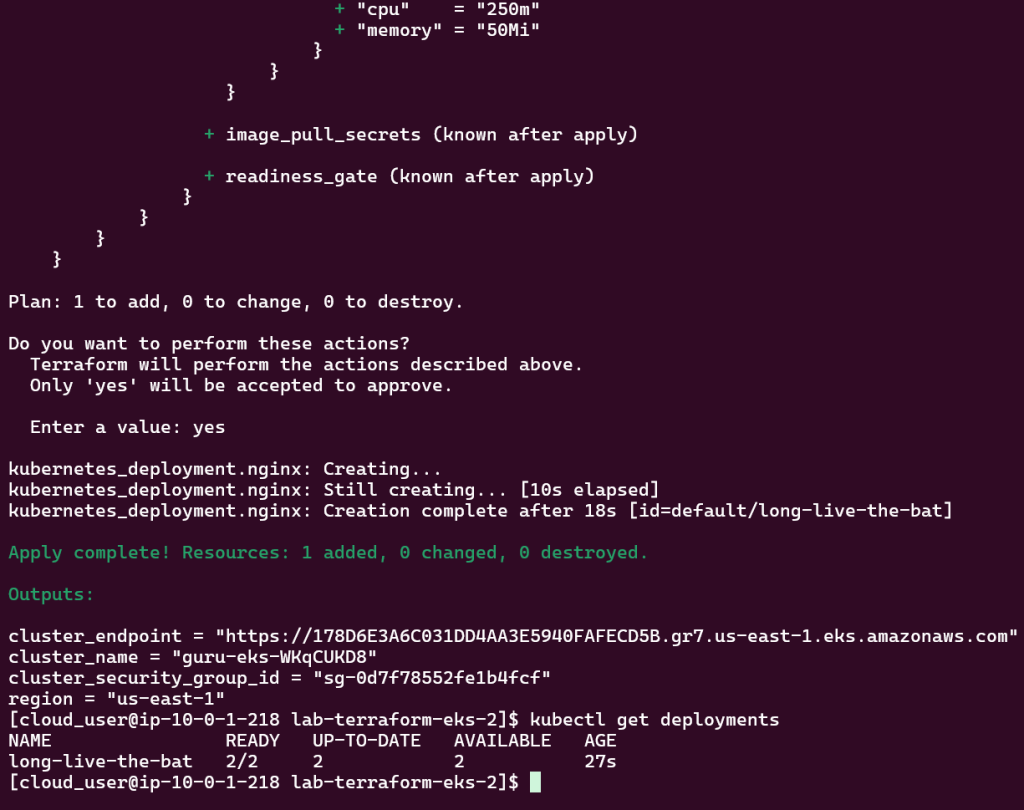

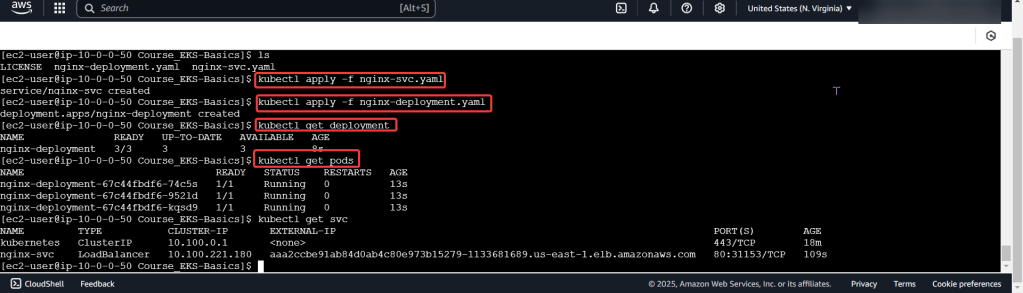

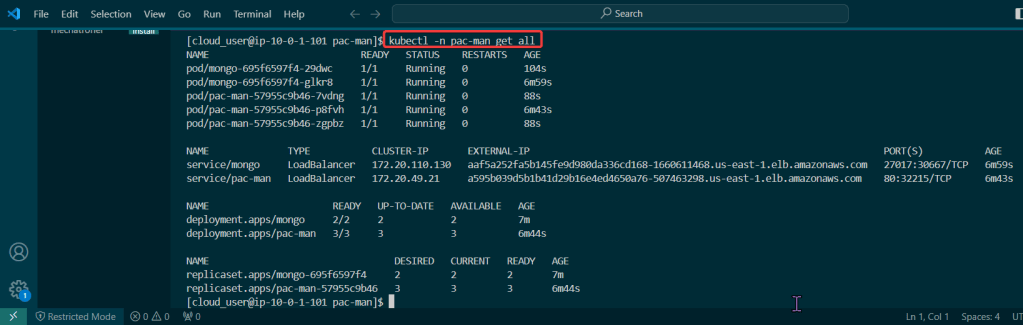

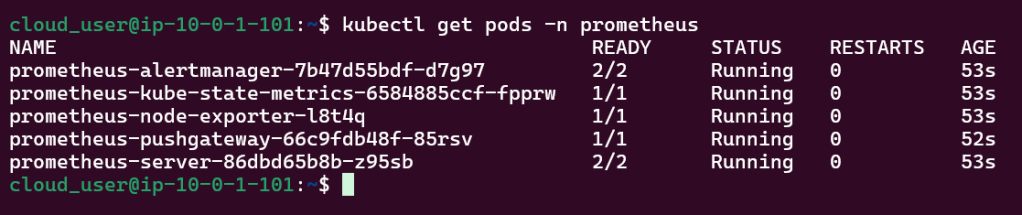

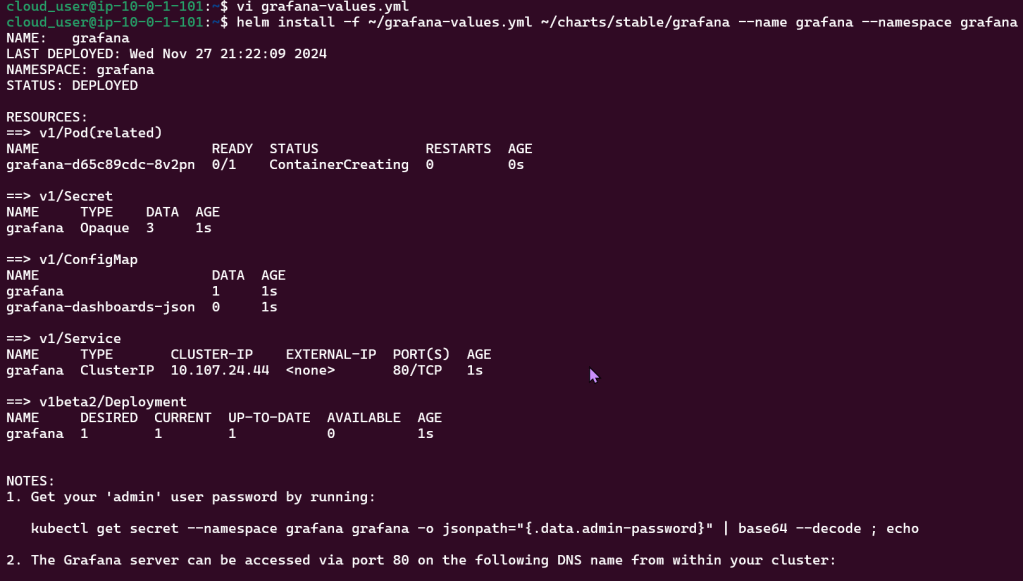

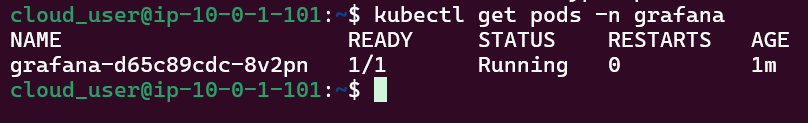
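In text form, the Bitnami steps above might look something like the sketch below. The repo URL, the `bitnami/cert-manager` chart reference, the `cert-manager` release and namespace names, and the use of `--create-namespace` (Helm 3.2+) are assumptions rather than a transcription of the screenshots; `install_cert_manager` is a hypothetical wrapper name.

```shell
# Minimal sketch: add a chart repo, refresh the listing, install a chart.
# Assumptions: repo URL, release name, and namespace are illustrative values.
BITNAMI_REPO_URL="https://charts.bitnami.com/bitnami"
RELEASE_NAME="cert-manager"
NAMESPACE="cert-manager"

# install_cert_manager is a hypothetical helper name, not a Helm command.
install_cert_manager() {
  # Register the Bitnami chart repository under the local alias "bitnami".
  helm repo add bitnami "$BITNAMI_REPO_URL" || return 1
  # Refresh the local cache of available charts.
  helm repo update || return 1
  # Install the chart as a named release; --create-namespace creates the
  # namespace if it does not exist yet.
  helm install "$RELEASE_NAME" bitnami/cert-manager \
    --namespace "$NAMESPACE" --create-namespace
}
```

If you'd rather create the namespace yourself, `kubectl create namespace cert-manager` followed by the same `helm install` without `--create-namespace` should get you to the same place.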

View the pods, deployments, & services created by installing the Helm chart:
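As a rough text equivalent, a single `kubectl get` call can list all three resource types at once. This sketch assumes the chart went into a namespace called `cert-manager`; `show_release_resources` is a hypothetical helper name.

```shell
# Minimal sketch: list pods, deployments, and services in one namespace.
# Assumption: the namespace defaults to "cert-manager" unless one is passed in.
show_release_resources() {
  kubectl get pods,deployments,services --namespace "${1:-cert-manager}"
}
```

Run `show_release_resources` for the default namespace, or pass a different one as the first argument.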