Goal:

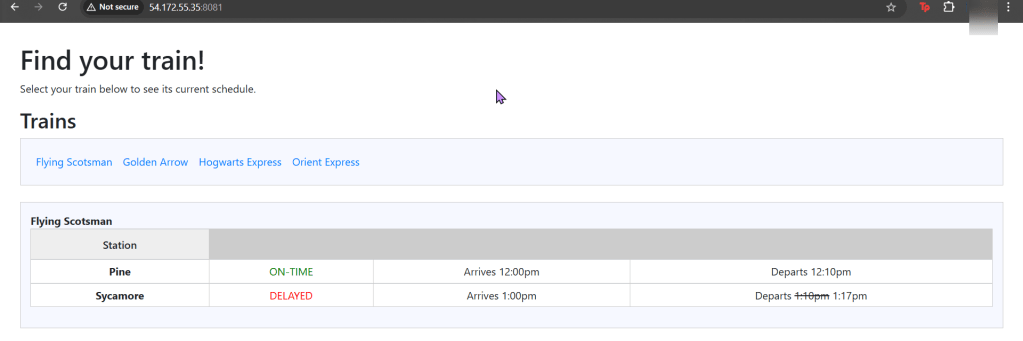

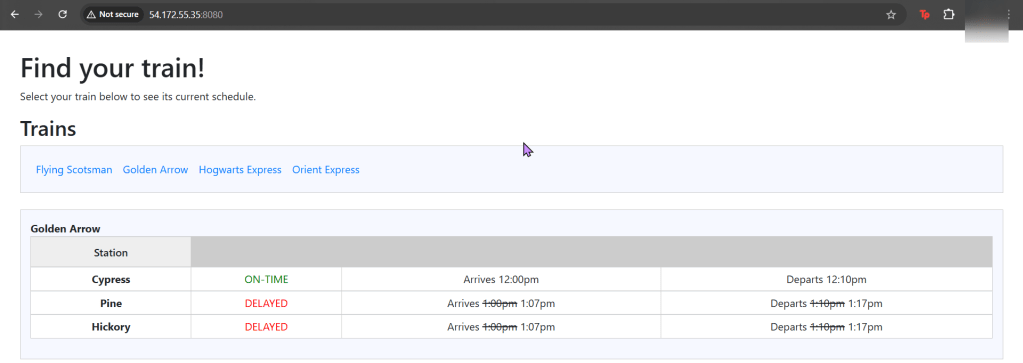

Our coal mine (CI/CD pipeline) is struggling, so let's use canary deployments to monitor a Kubernetes cluster under a Jenkins pipeline. Alright, let's level set here…

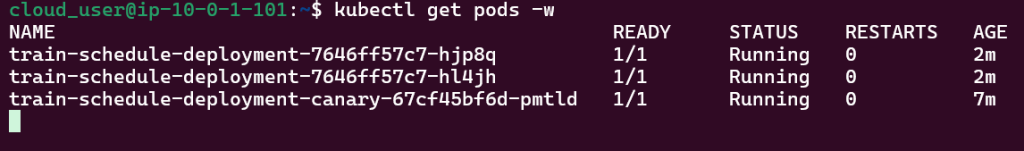

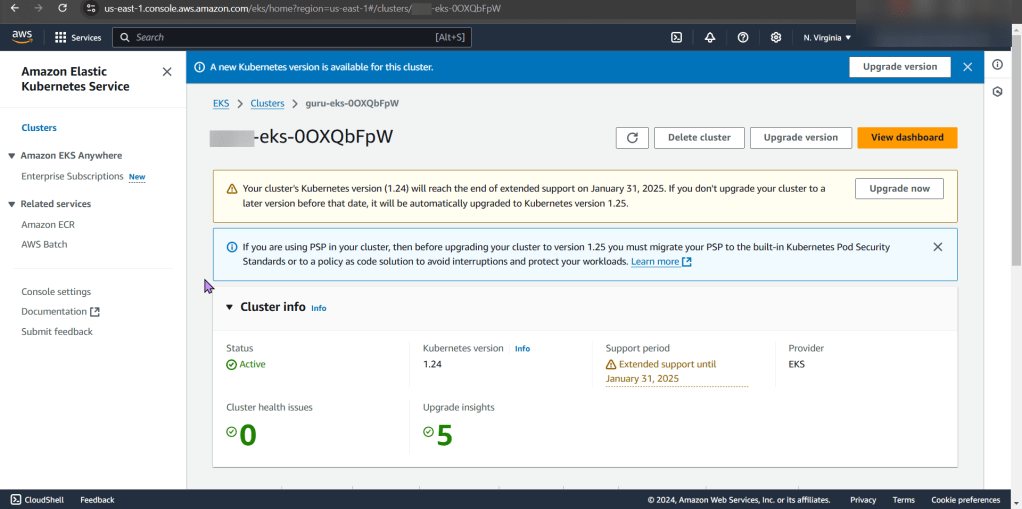

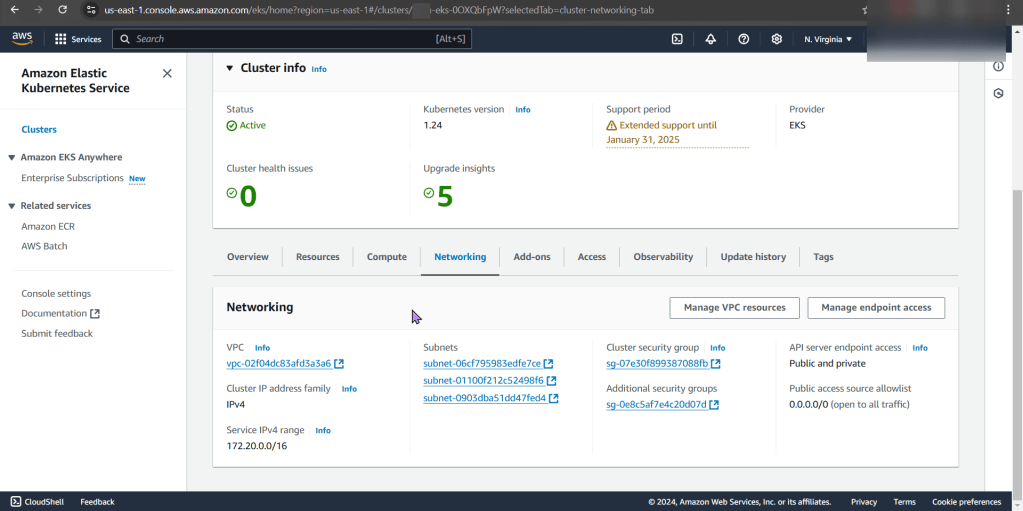

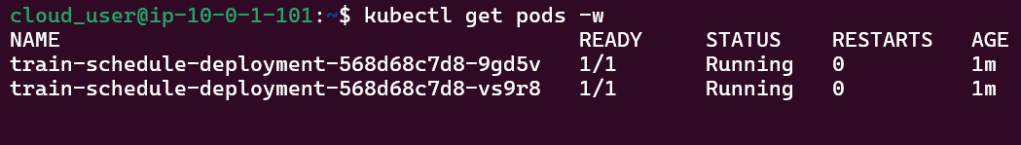

- You got a Kubernetes cluster, mmmmkay?

- A pipeline from Jenkins leads to CI/CD deployments, yeah?

- Now we must add the deetz (details) to get canary to deploy

Lessons Learned:

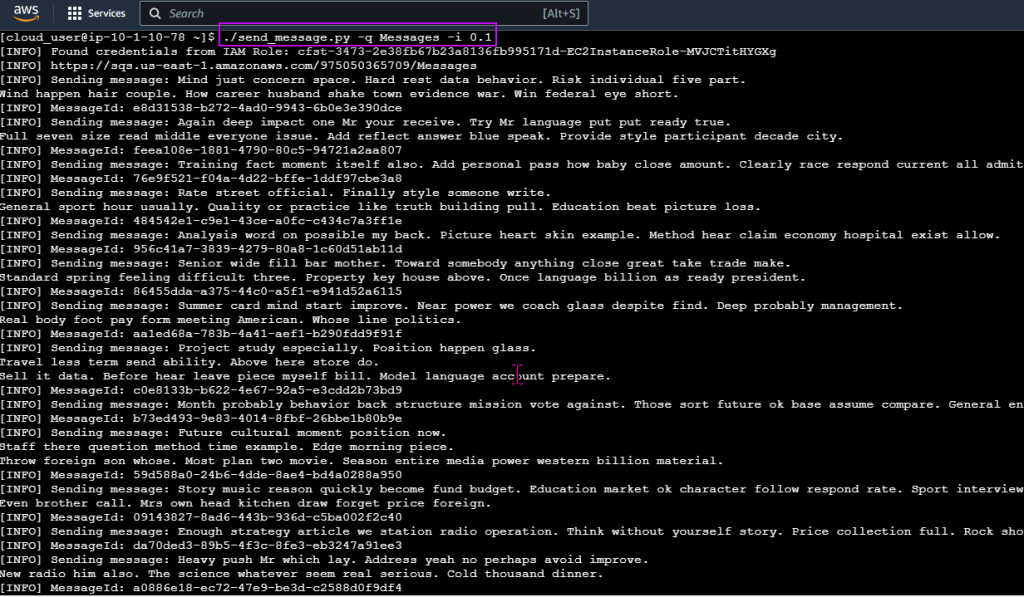

- Run Deployment in Jenkins

- Add Canary to Pipeline to run Deployment

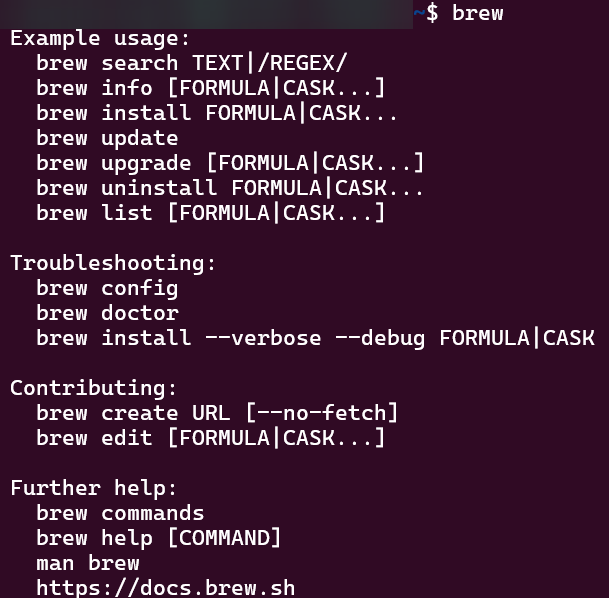

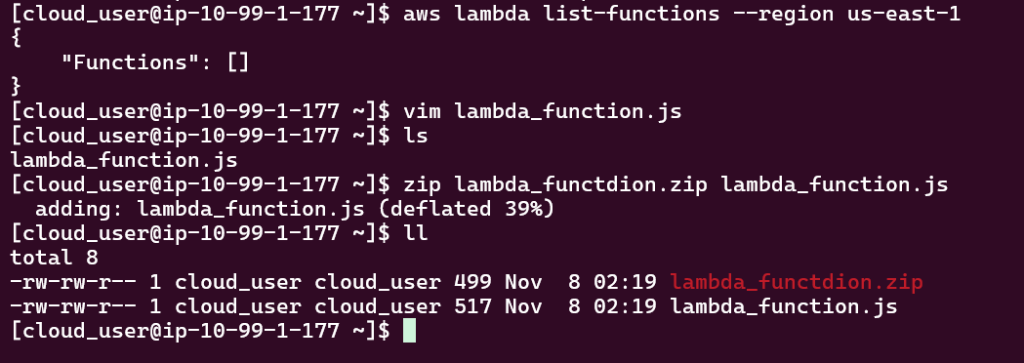

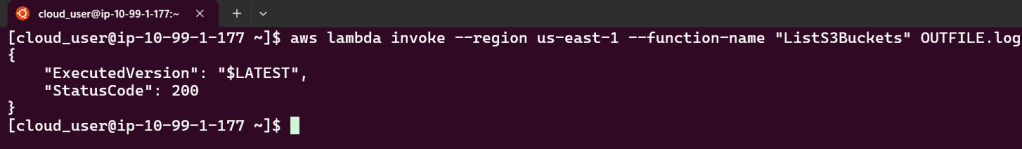

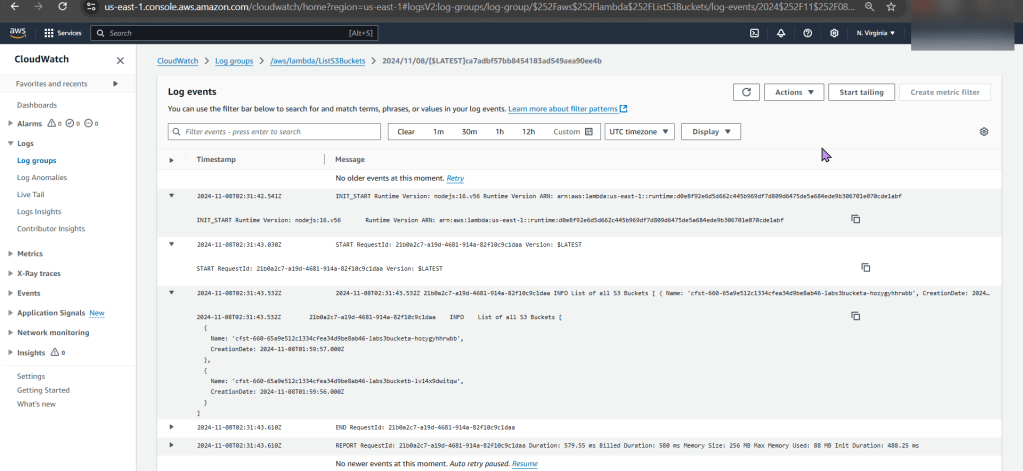

Run Deployment in Jenkins:

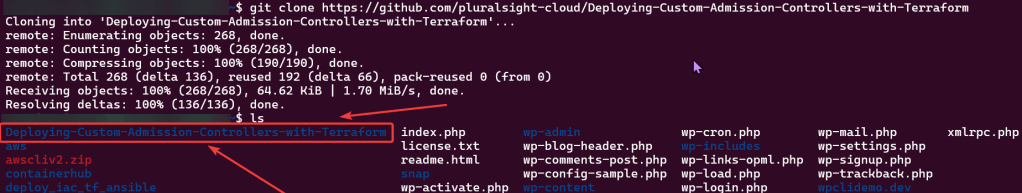

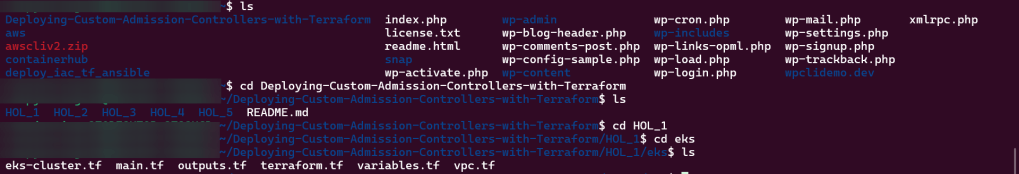

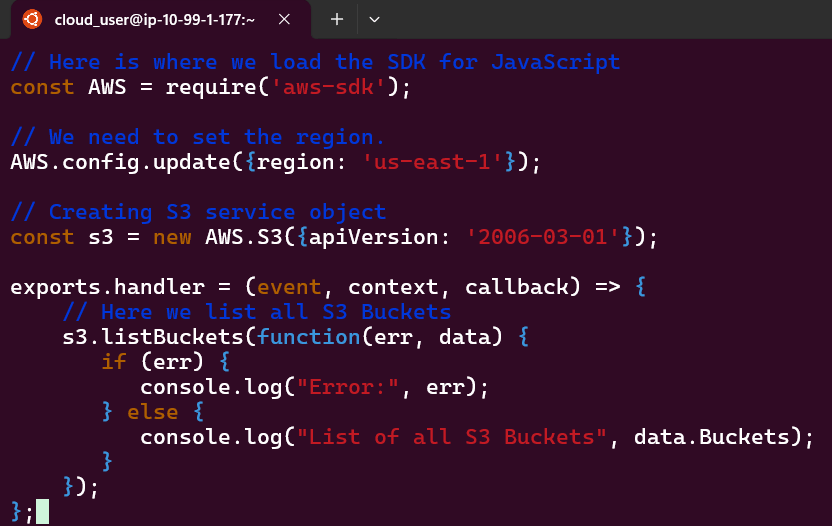

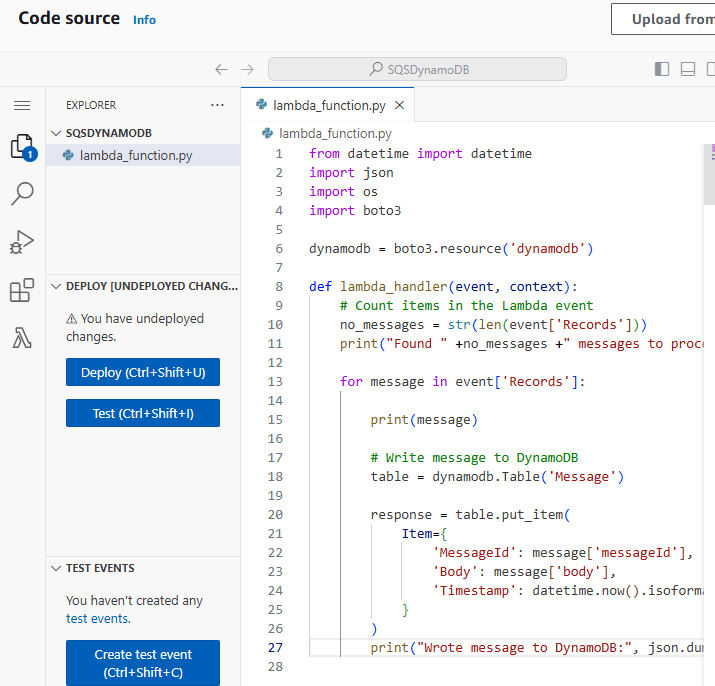

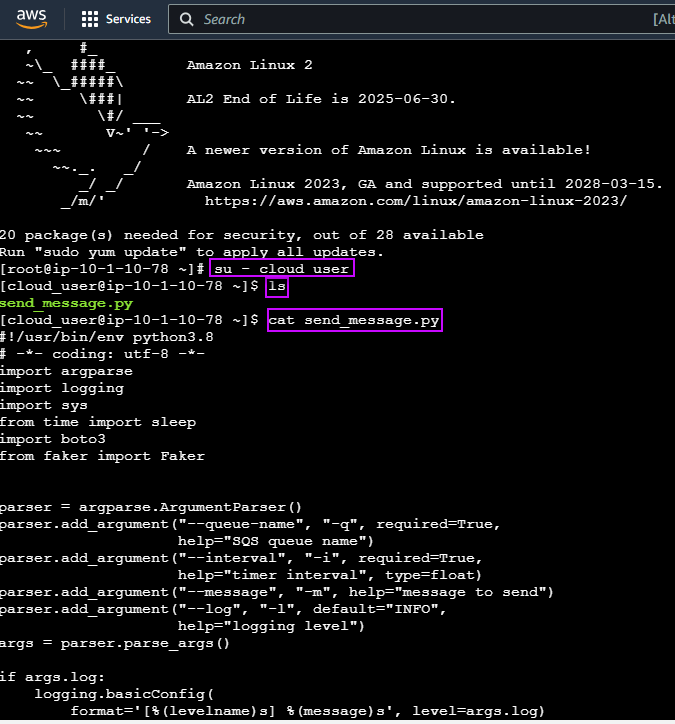

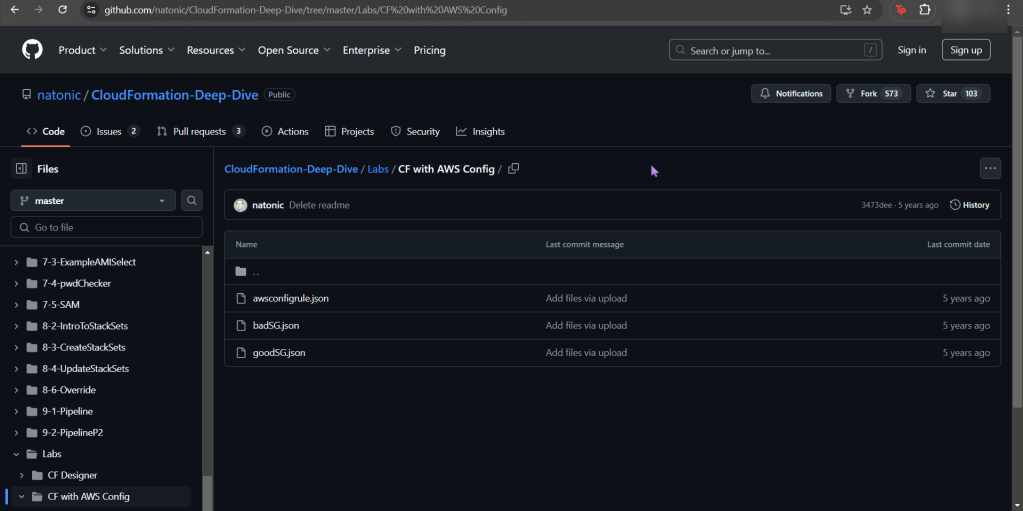

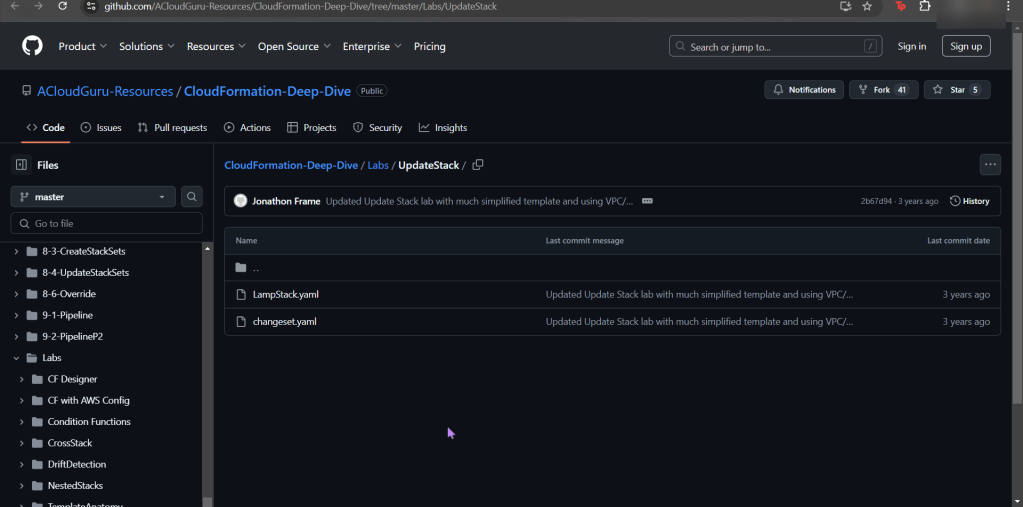

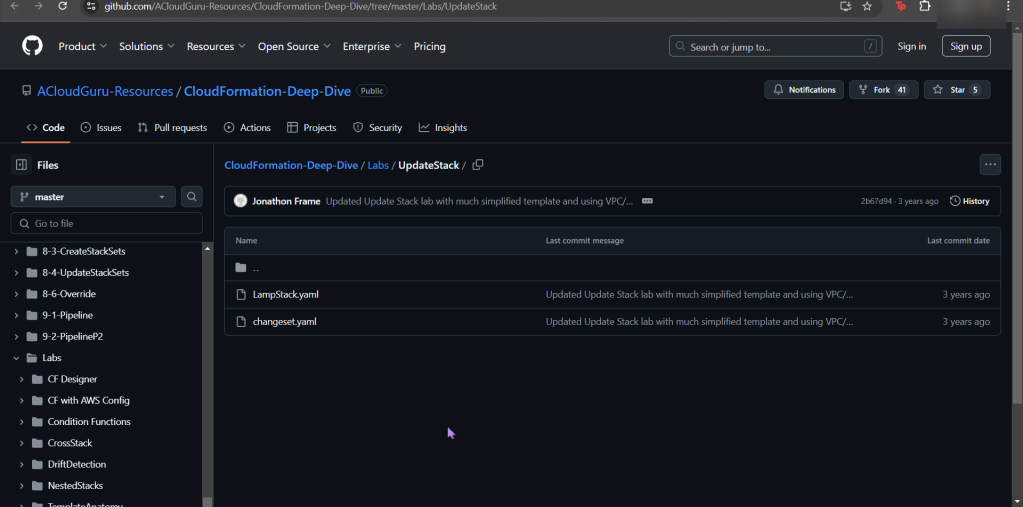

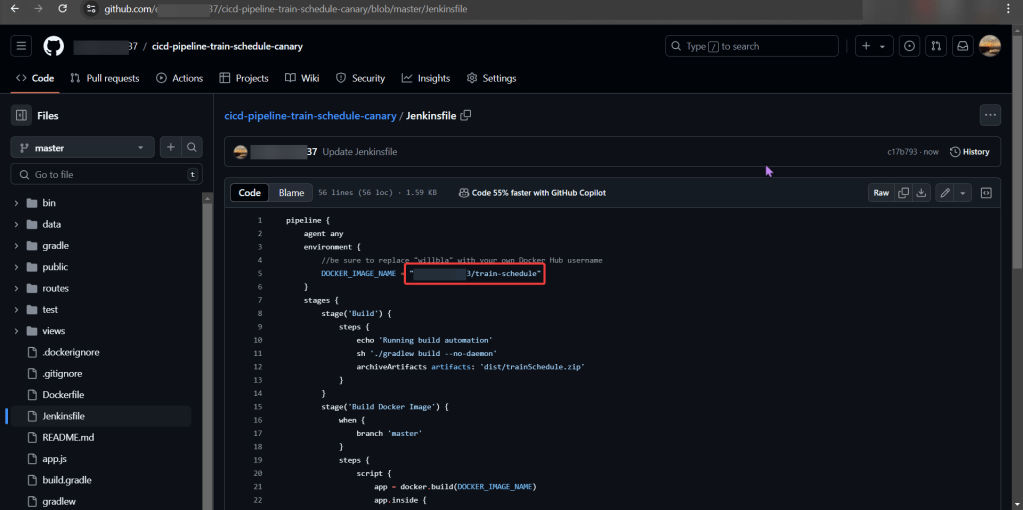

Source Code:

- Create fork & update username

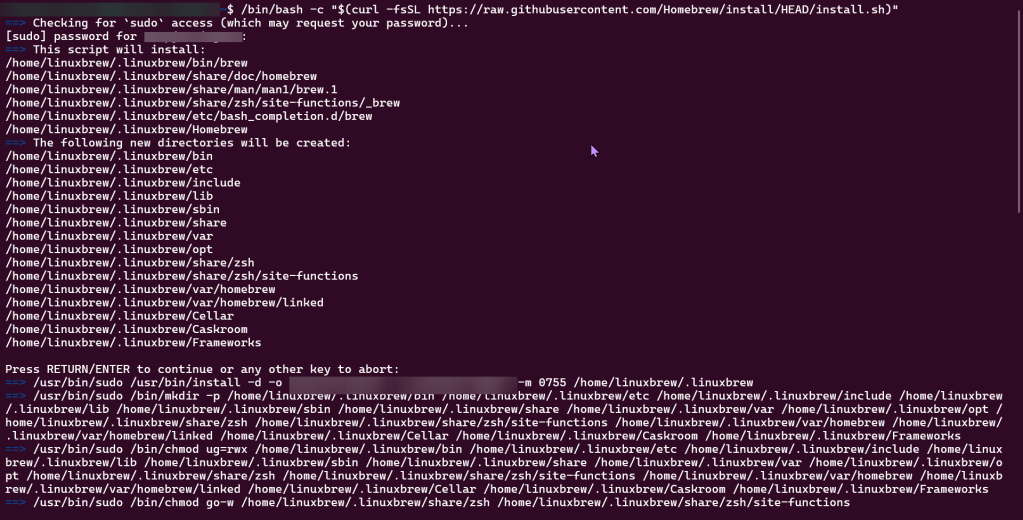

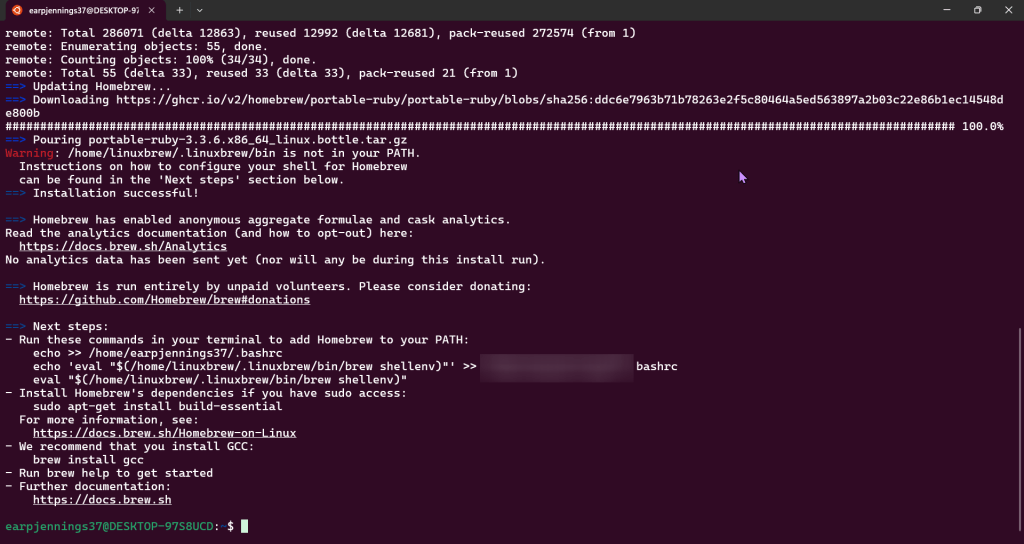

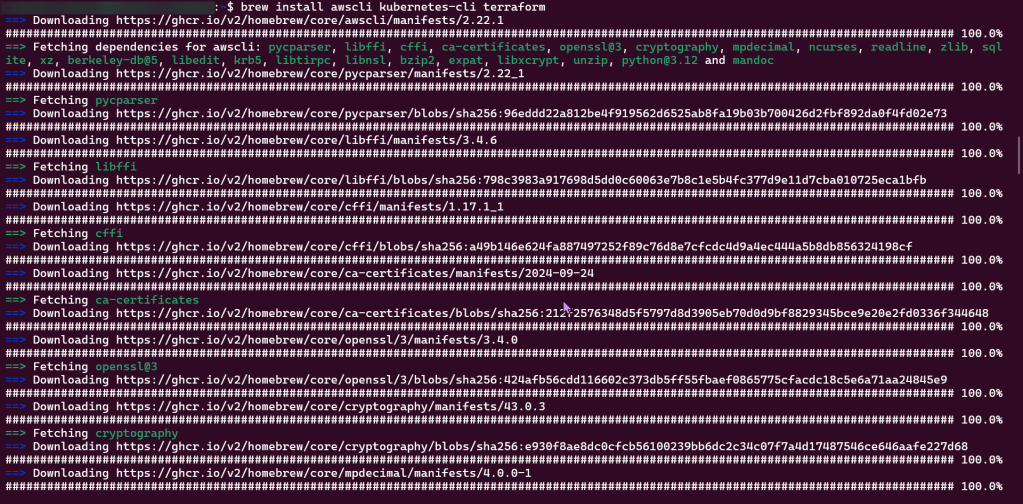

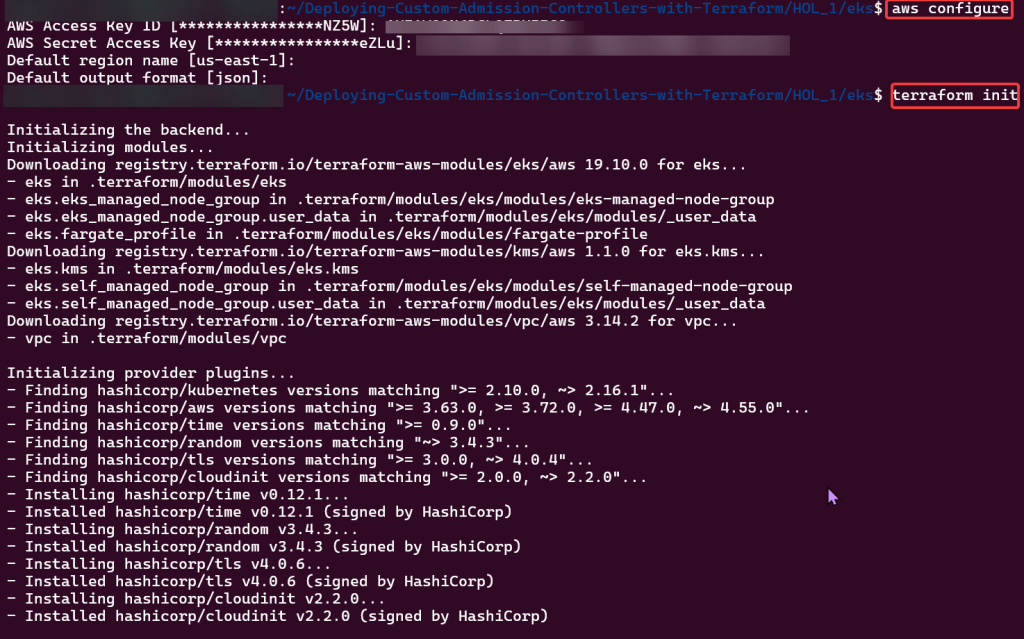

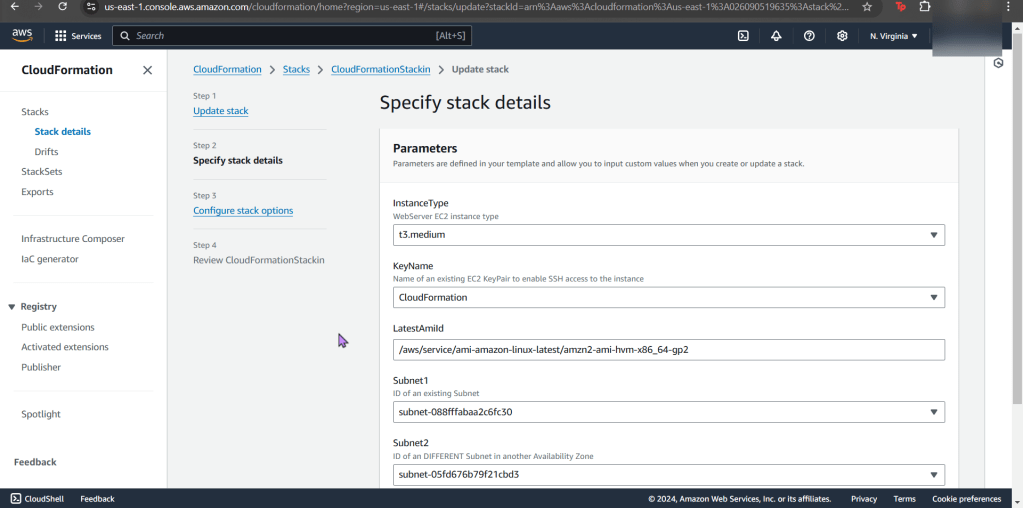

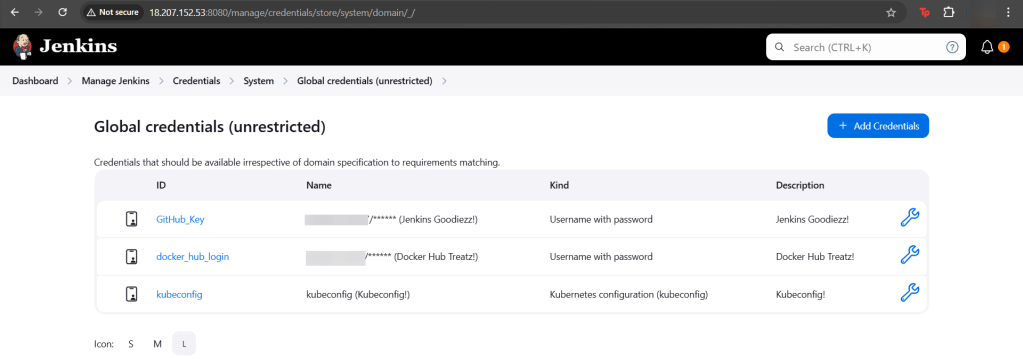

Set up Jenkins (GitHub access token, Docker Hub, & kubeconfig):

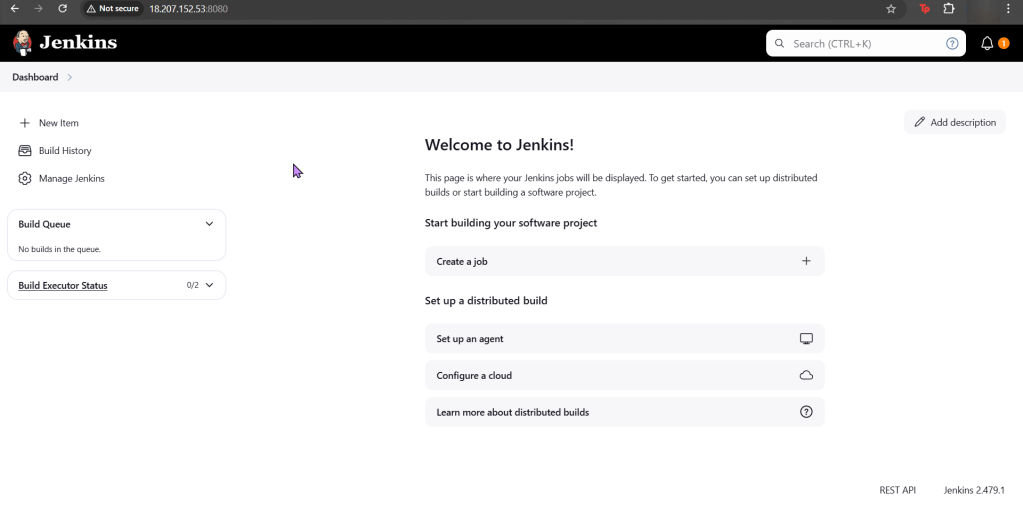

Jenkins:

- Credz

- GitHub username & password (use the access token as the password)

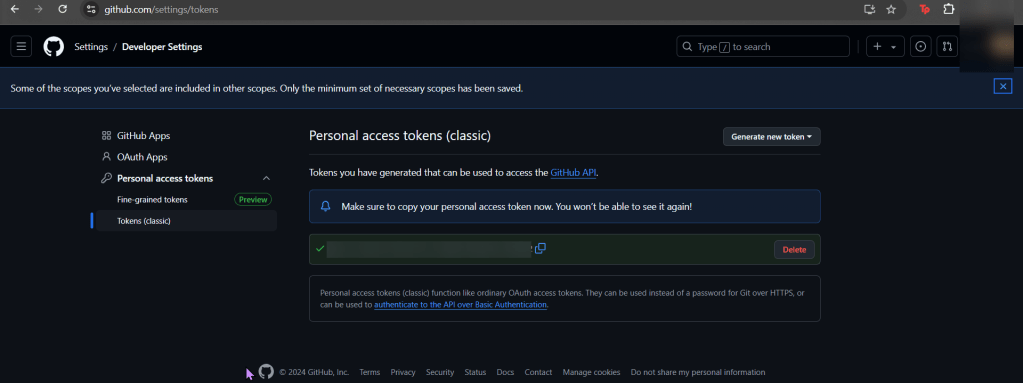

GitHub:

- Generate a personal access token

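Docker Hub:

- Use your Docker Hub username & password (Docker Hub can also generate access tokens in the account settings, and a token works in place of the password)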

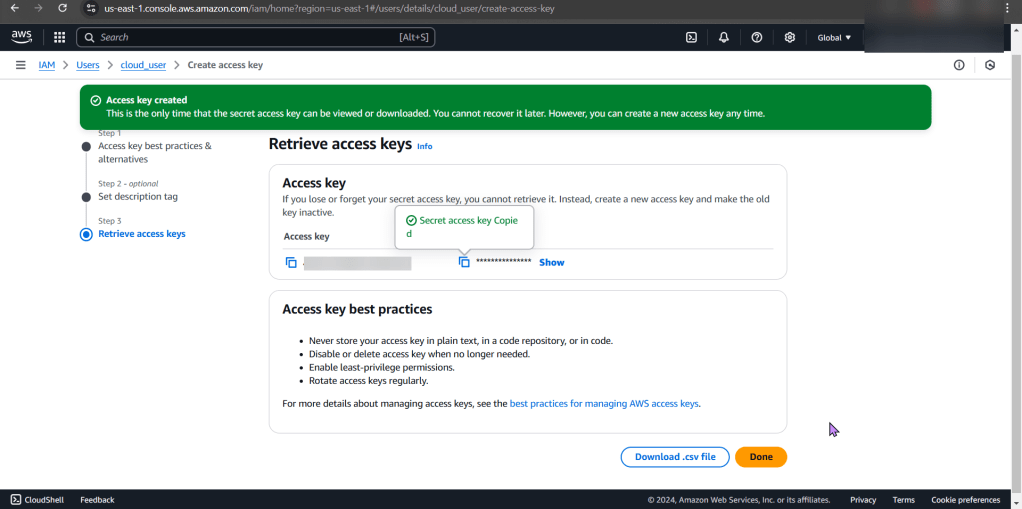

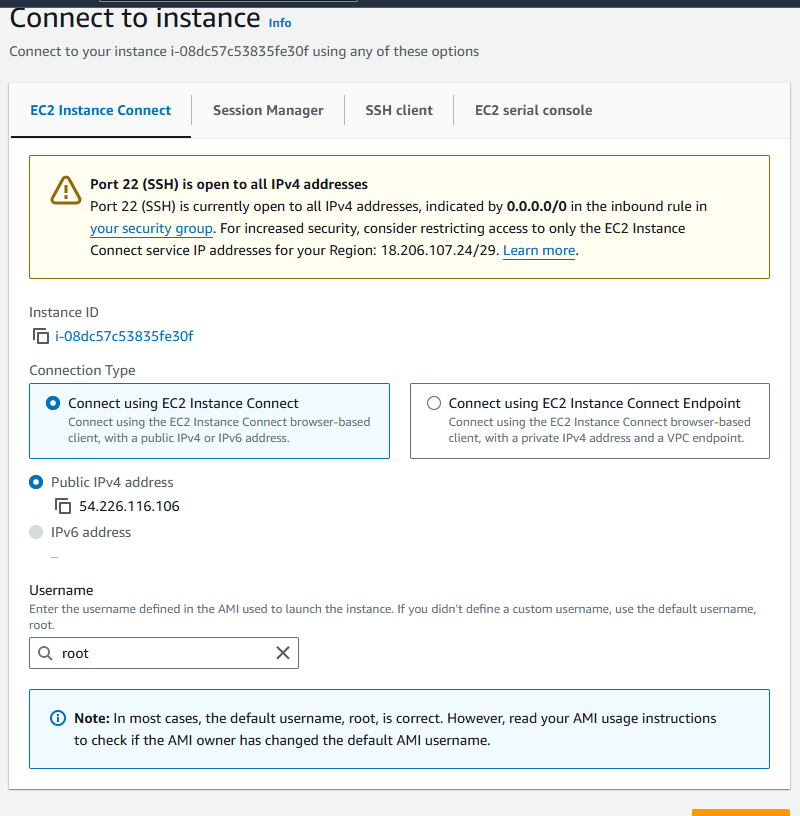

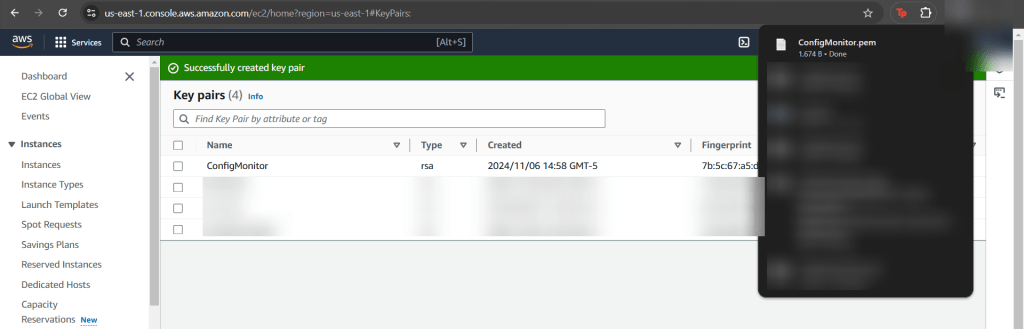

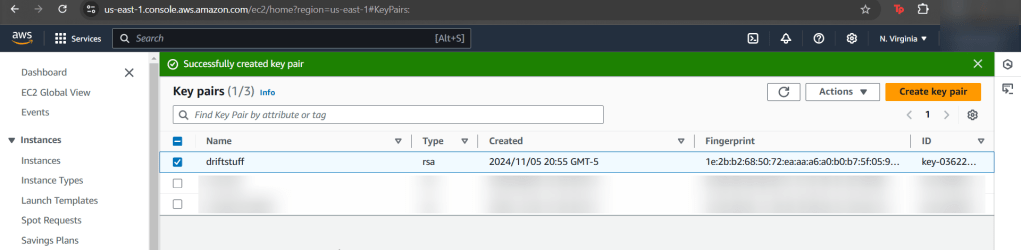

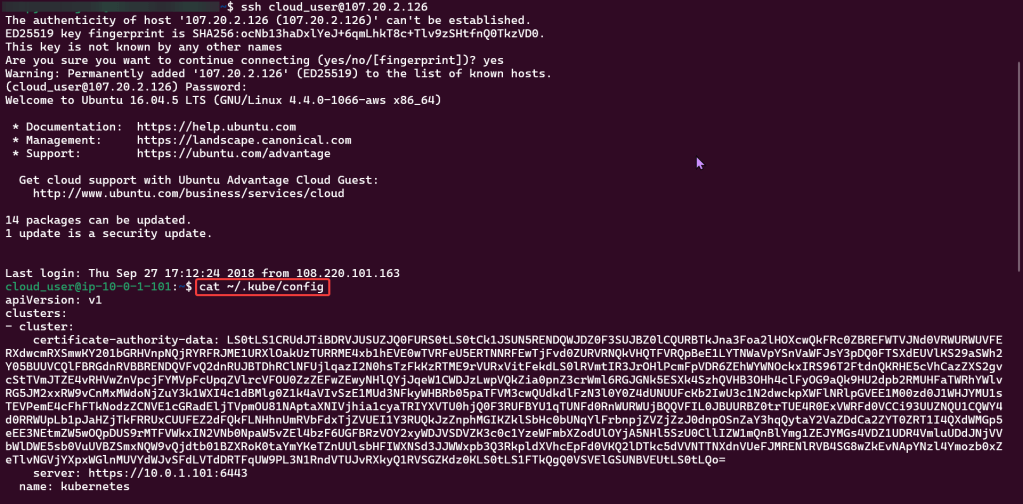

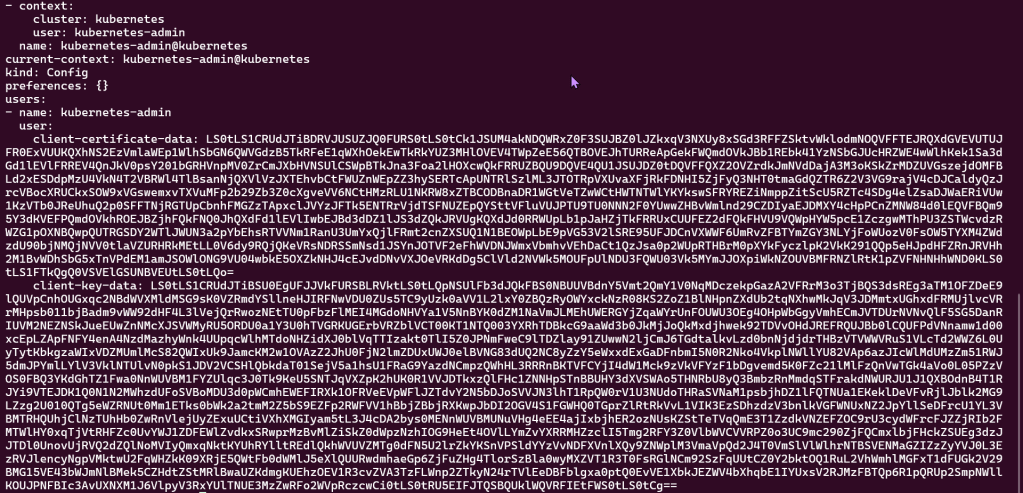

Kubernetes:

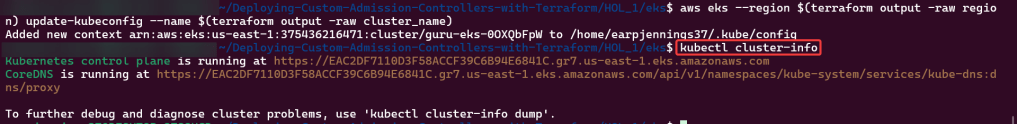

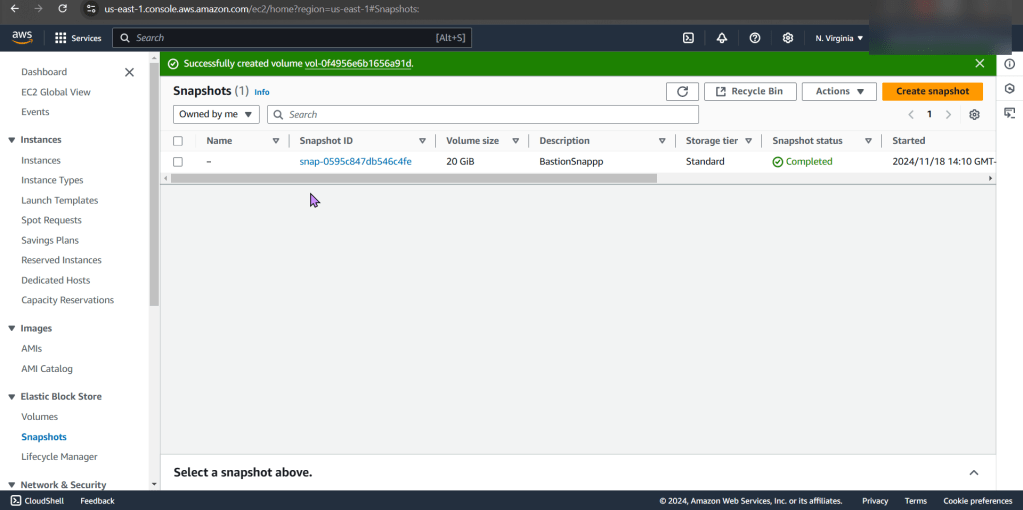

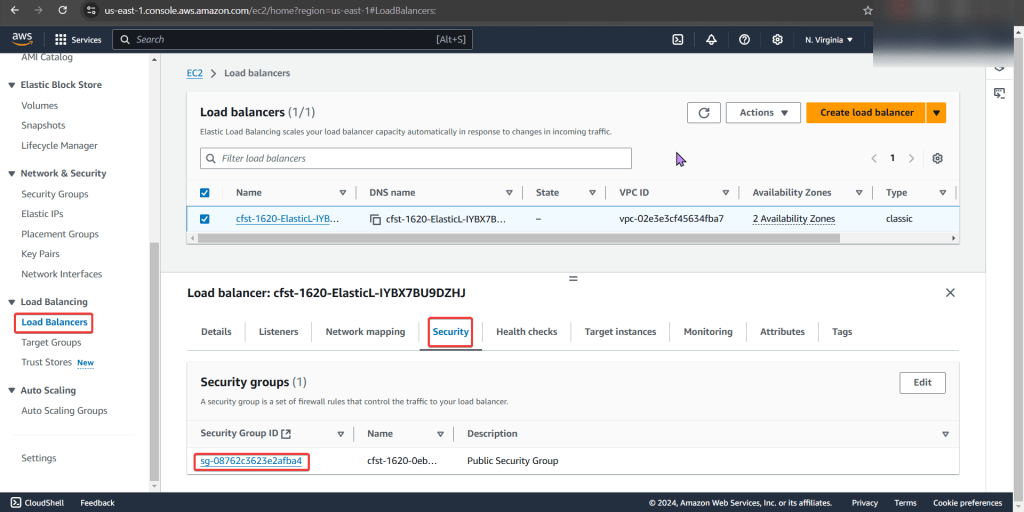

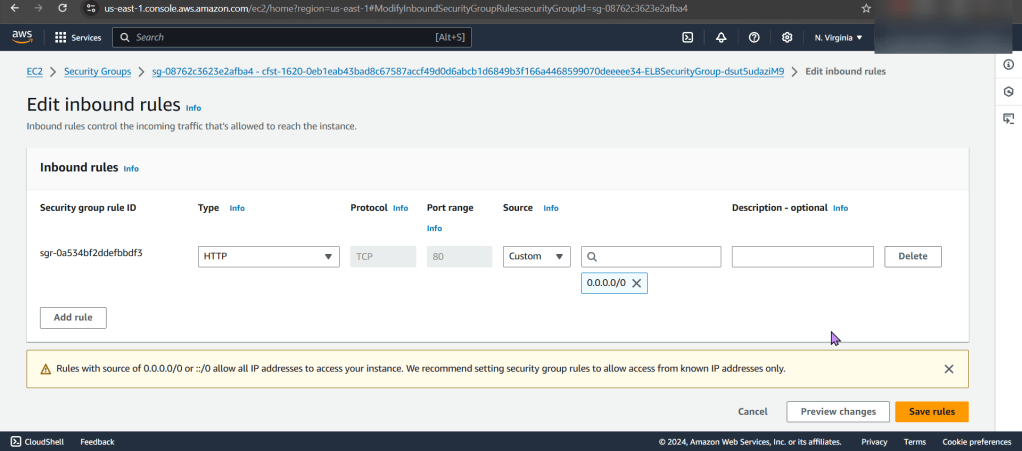

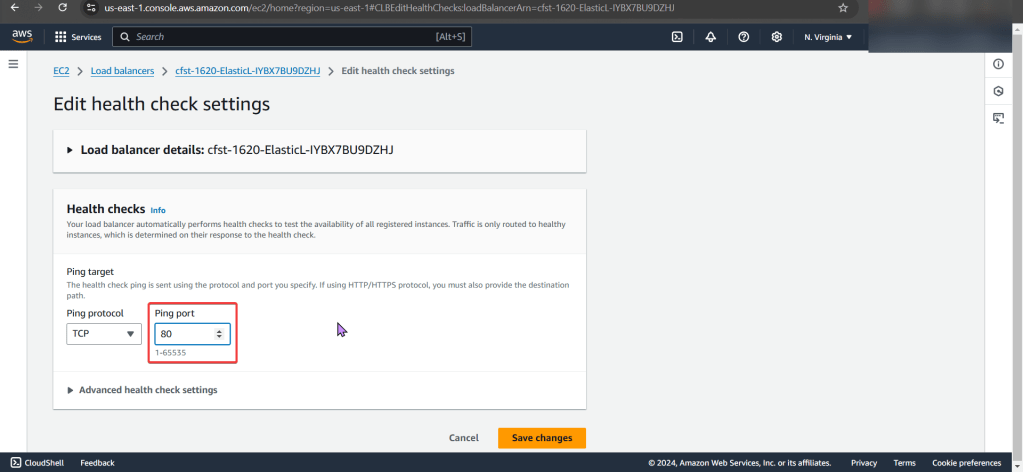

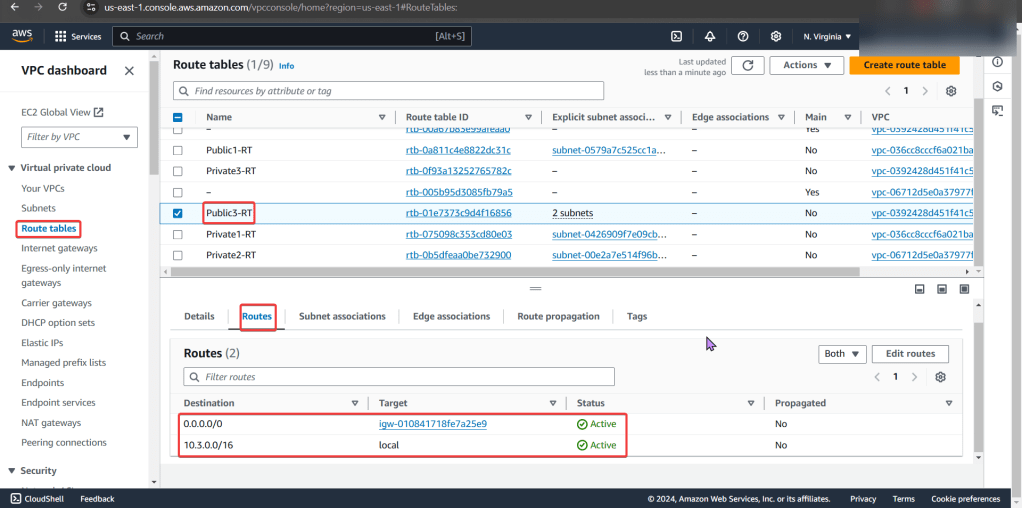

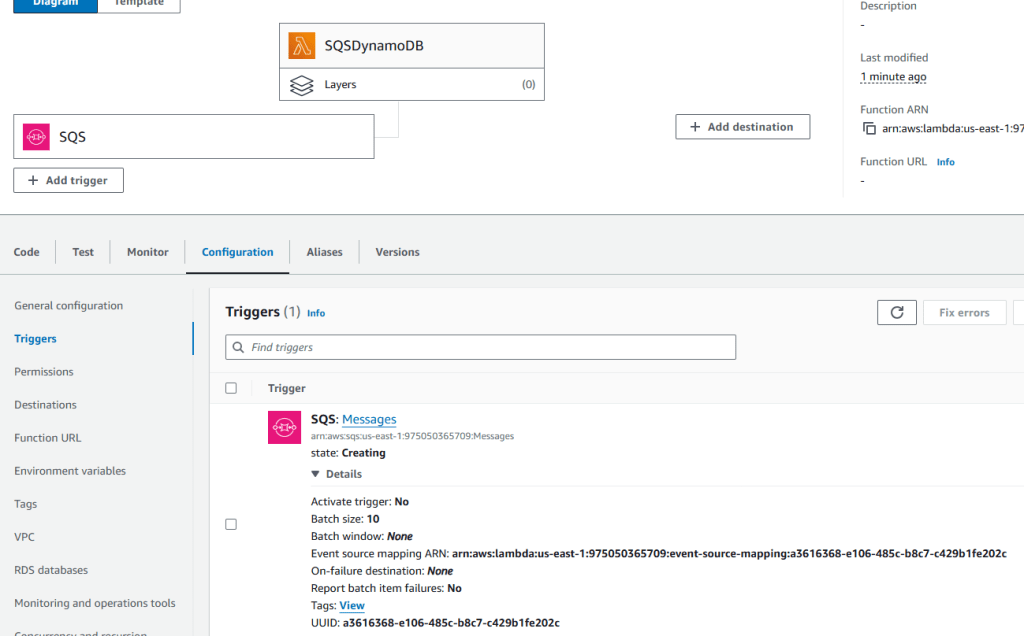

Add Canary to Pipeline to run Deployment:

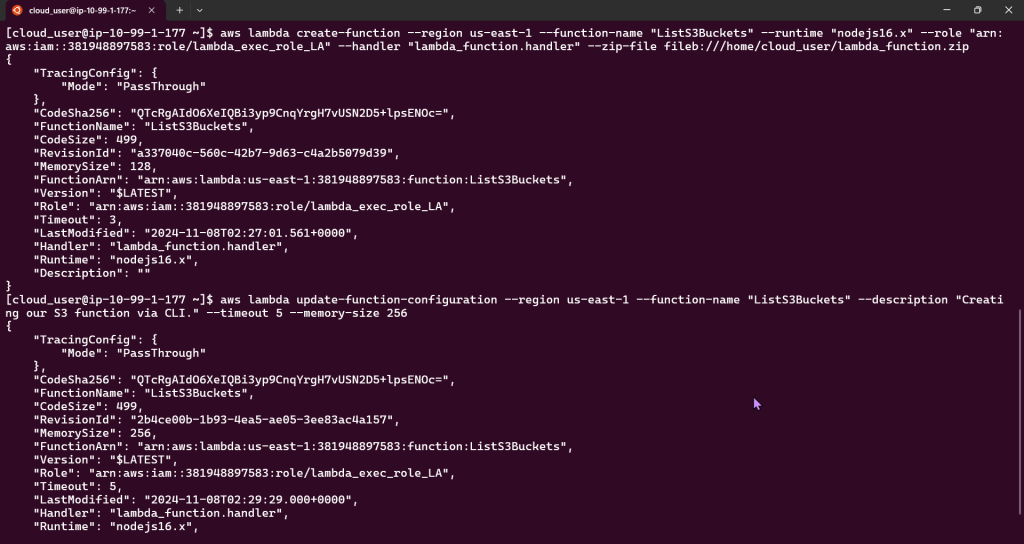

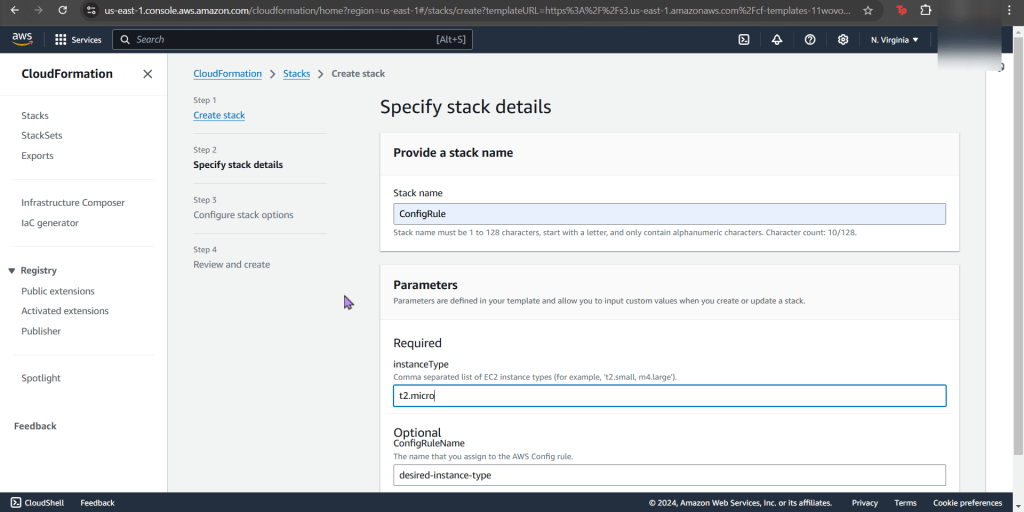

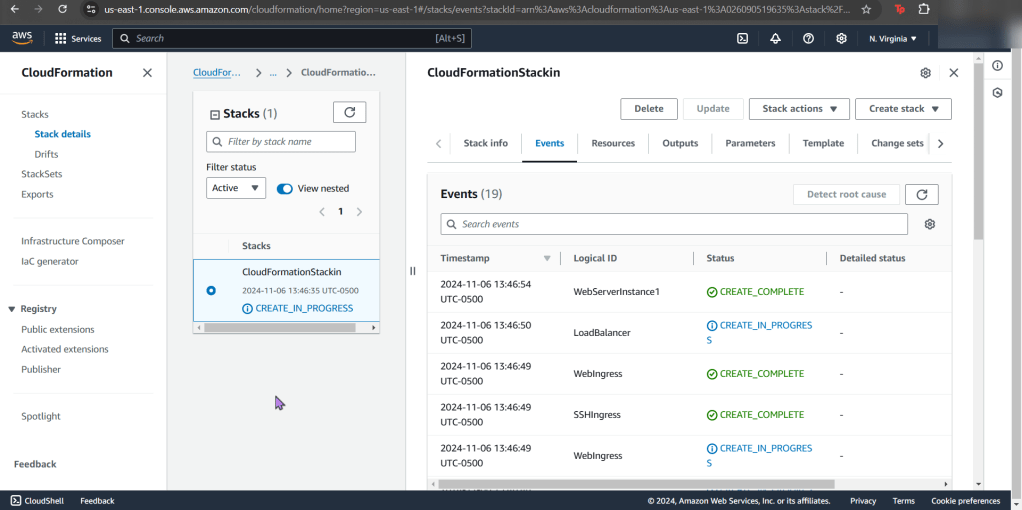

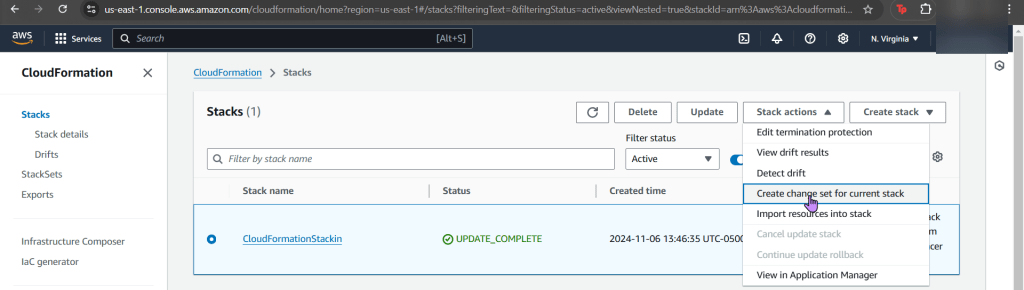

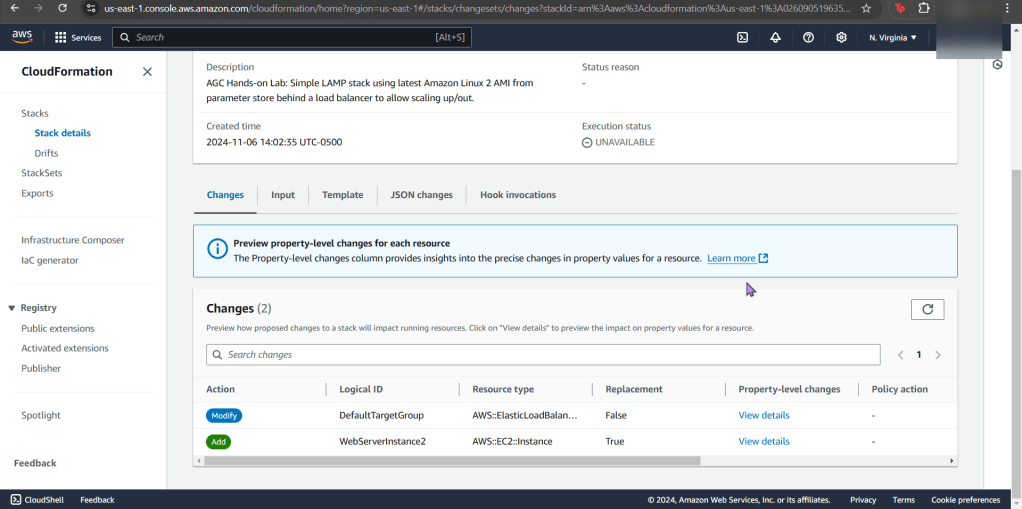

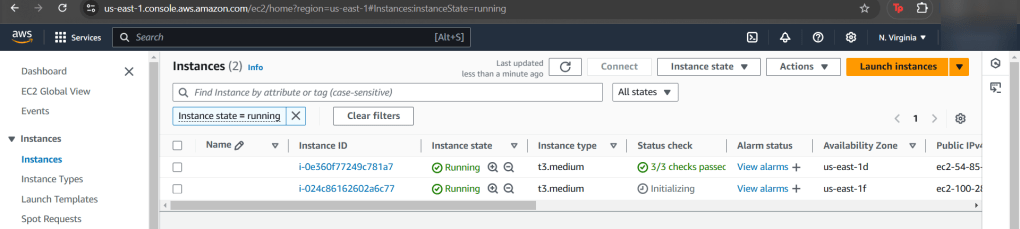

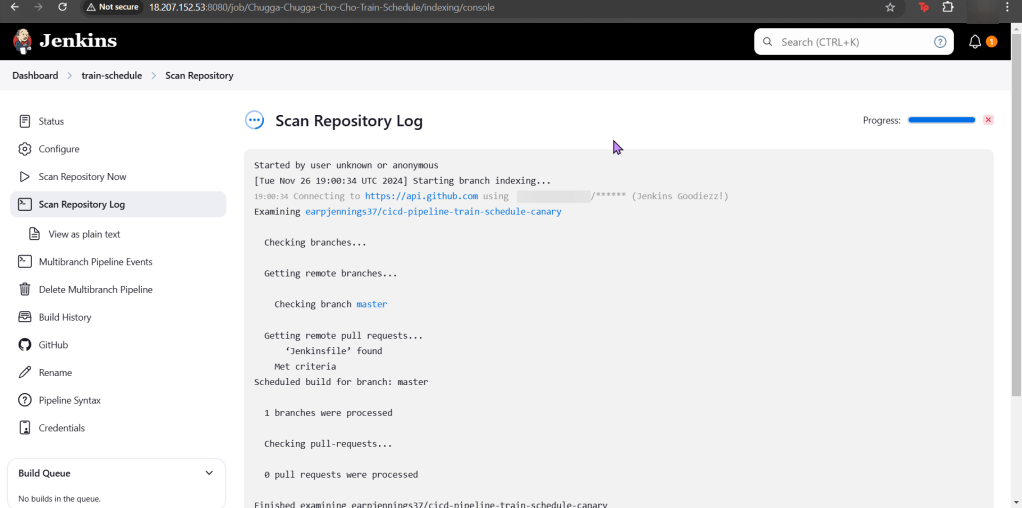

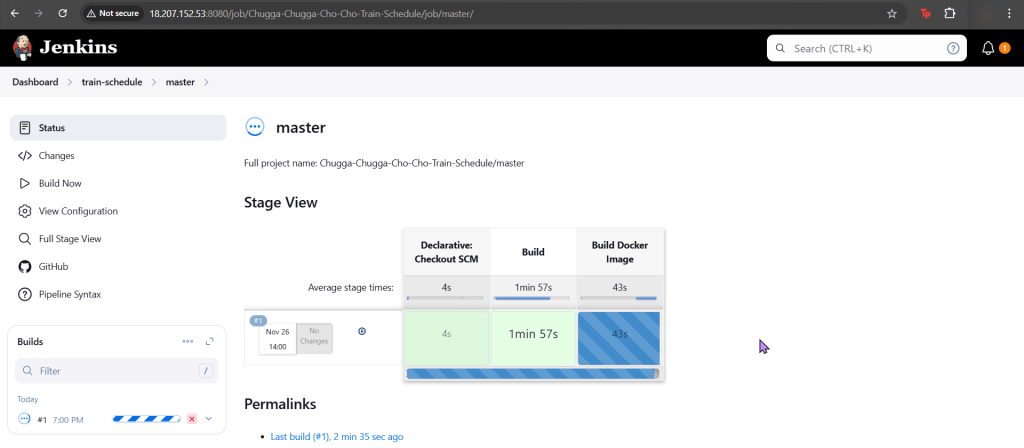

Create Jenkins Project:

- Multi-Branch Pipeline

- GitHub username

- Owner & forked repository

- If Jenkins offers a repository URL option, select the deprecated visualization option instead

- Check it out homie!

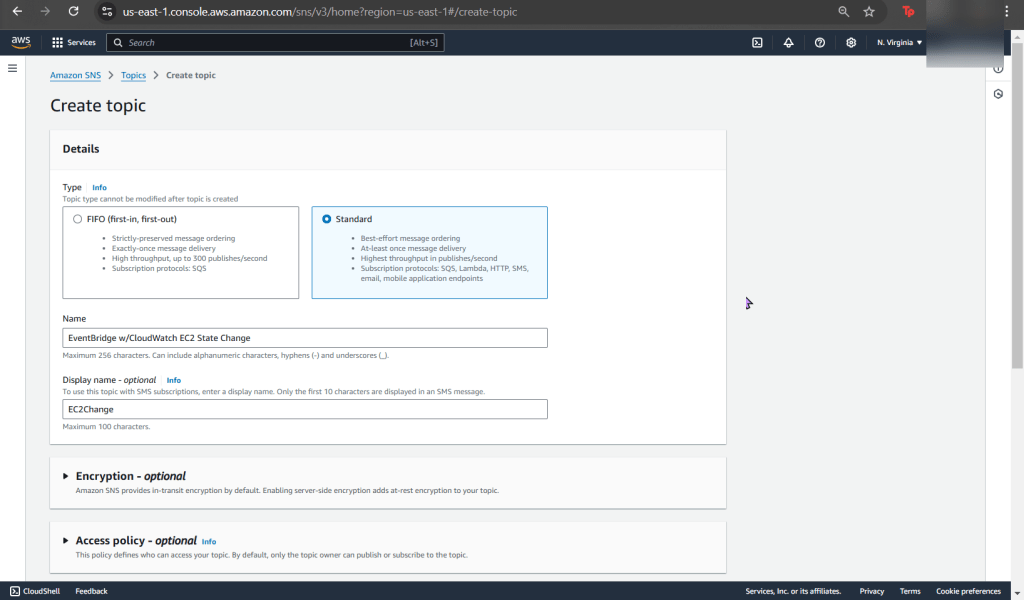

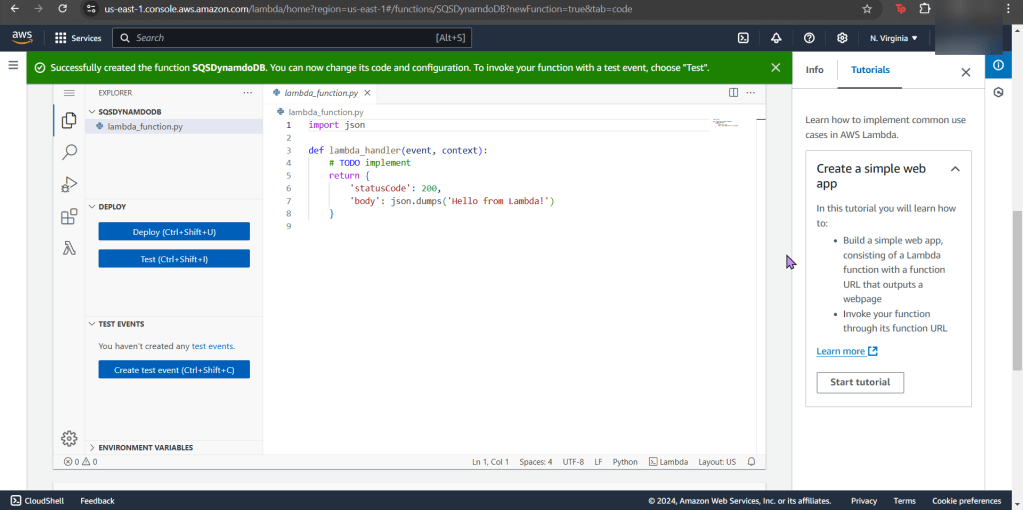

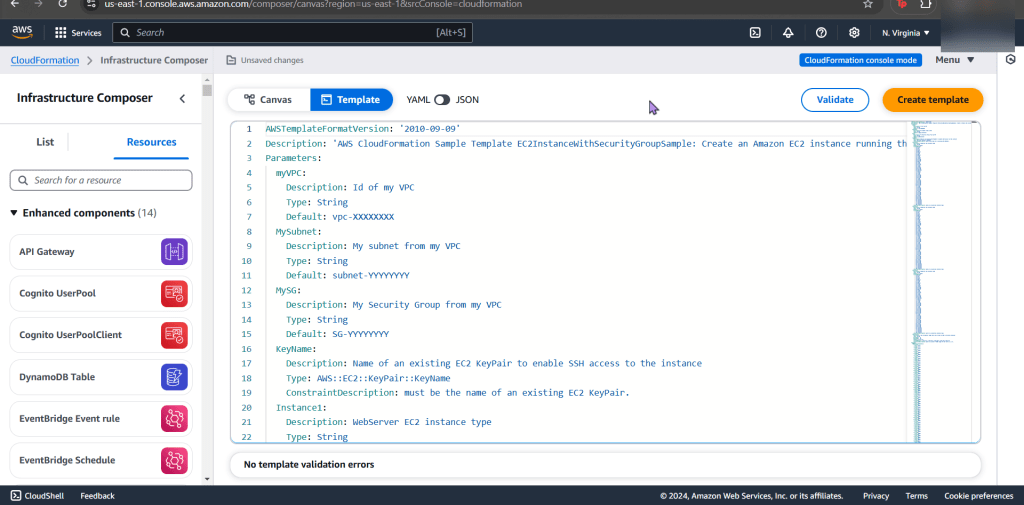

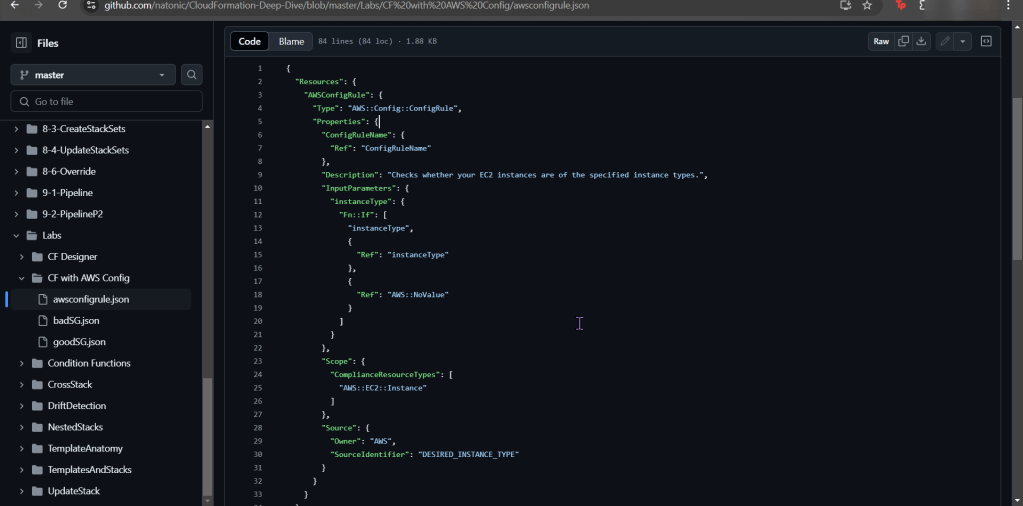

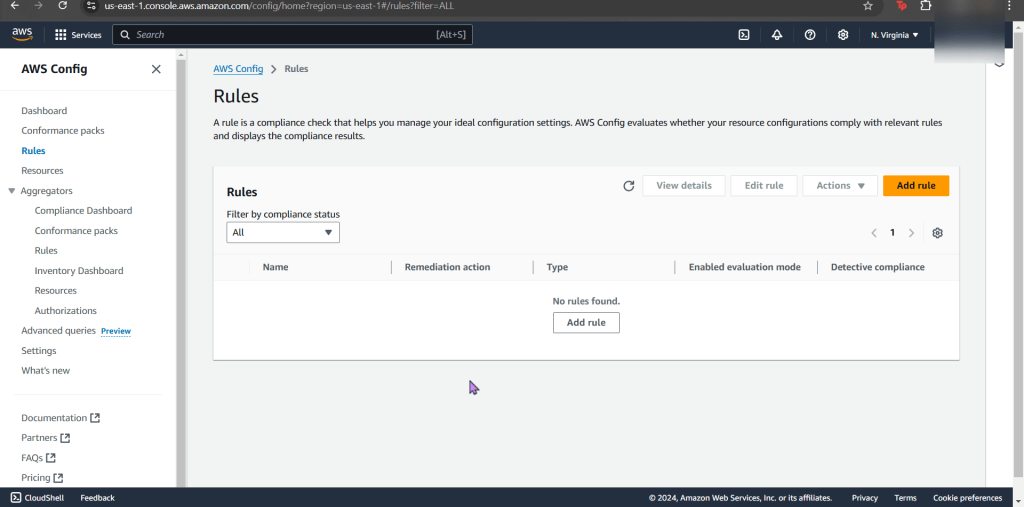

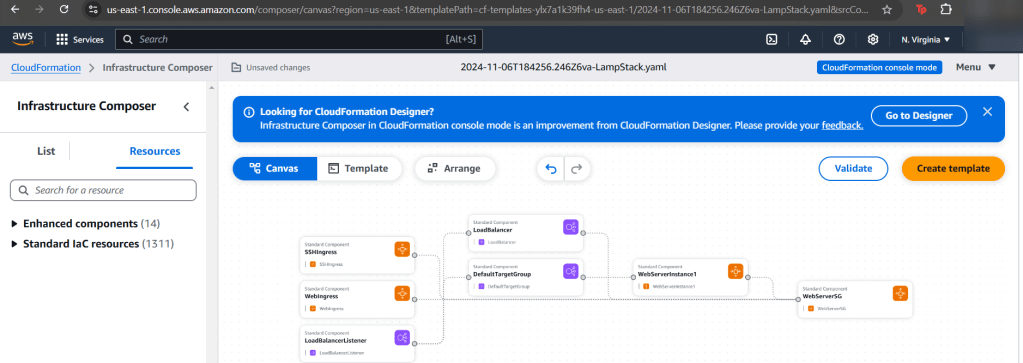

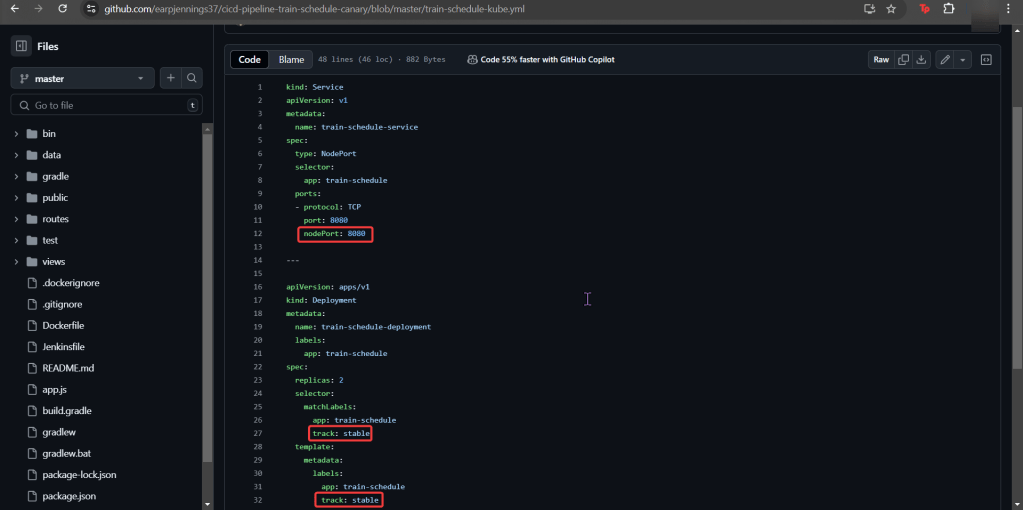

Canary Template:

- We have prod, but need Canary features for stages in our deployment!

- Pay attention to:

  - track

  - spec

  - selector

  - port
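To make those four fields concrete, here is a minimal sketch of what a canary template could look like. It is not the exact file from the screenshots: the app name `example-app`, the image `example-user/example-app:latest`, port 8080, and nodePort 30081 are all placeholders I made up for illustration. It also assumes the production template uses the same `app` label with a different `track` value (for example `stable`) and a different node port.

```yaml
# Canary Deployment (sketch). All names, the image, and the ports are hypothetical.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: example-app-canary
  labels:
    app: example-app
    track: canary            # track: marks these pods as the canary
spec:                        # spec: how many canary pods, and what they run
  replicas: 1
  selector:
    matchLabels:             # selector: must match the pod template labels below
      app: example-app
      track: canary
  template:
    metadata:
      labels:
        app: example-app
        track: canary
    spec:
      containers:
        - name: example-app
          image: example-user/example-app:latest
          ports:
            - containerPort: 8080
---
# Canary Service (sketch): exposes only the canary pods on their own node port.
apiVersion: v1
kind: Service
metadata:
  name: example-app-canary
spec:
  type: NodePort
  selector:                  # selector: routes traffic only to track=canary pods
    app: example-app
    track: canary
  ports:
    - name: http
      protocol: TCP
      port: 8080             # port: the Service port inside the cluster
      targetPort: 8080       # the container port on the pod
      nodePort: 30081        # must differ from the node port production uses
```

The selector is the piece that bites: if the Deployment's `matchLabels` and the pod template labels disagree, Kubernetes rejects the Deployment, and if the Service selector drops `track`, it will send traffic to both canary and production pods.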

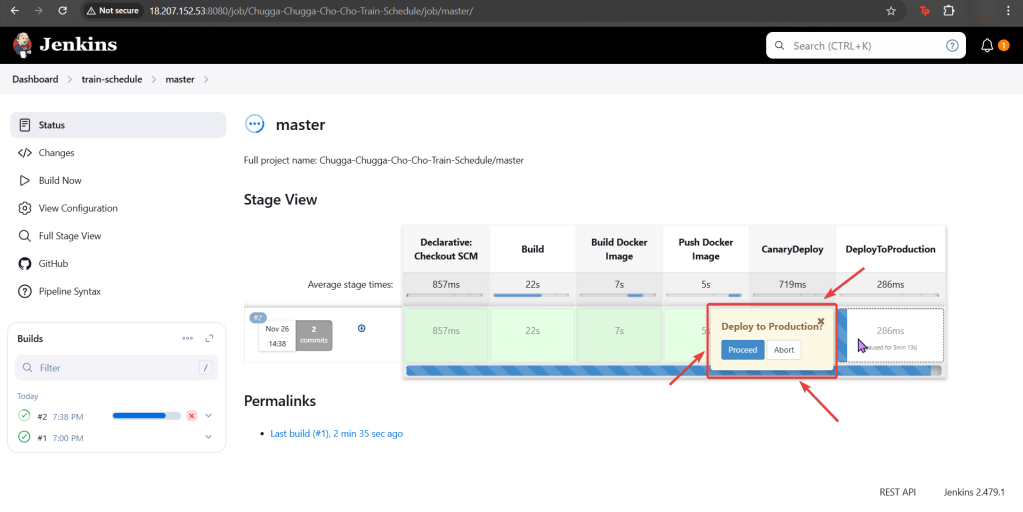

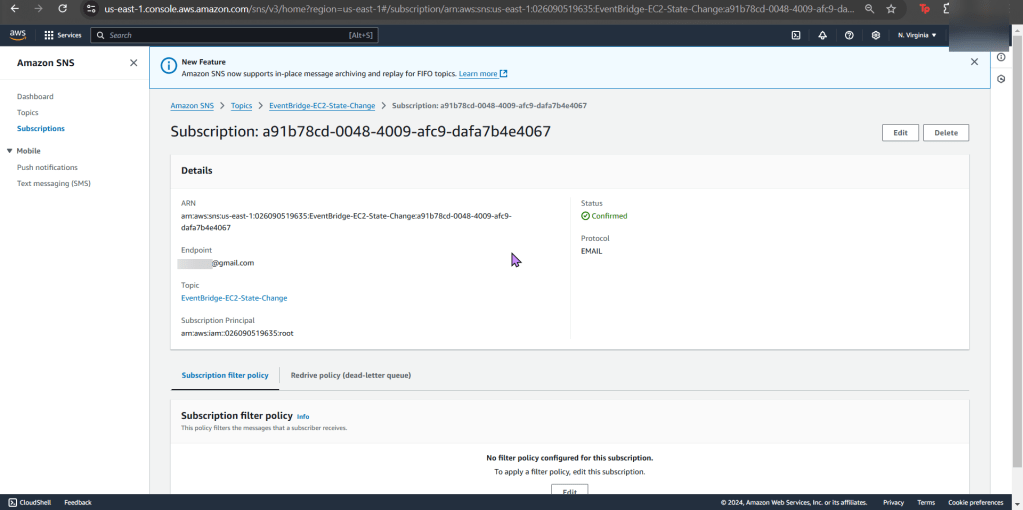

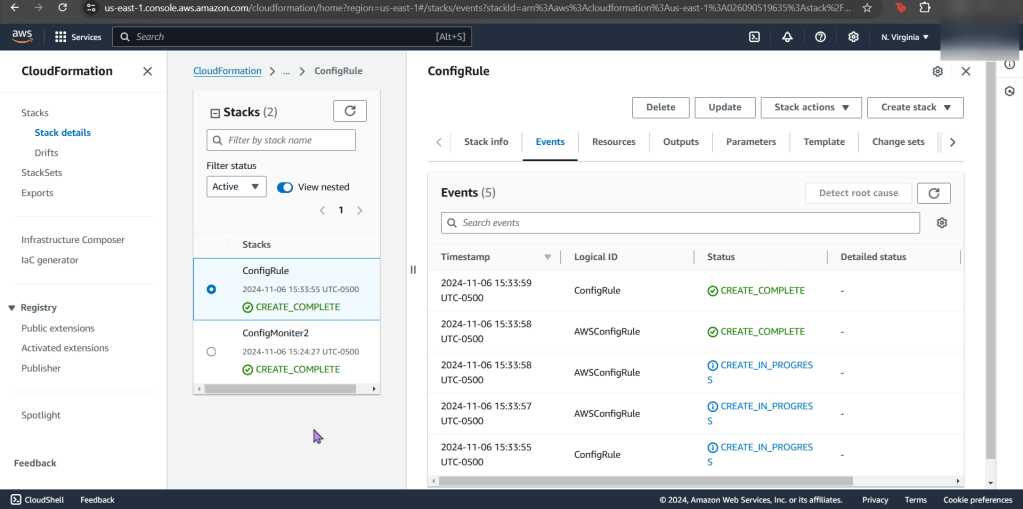

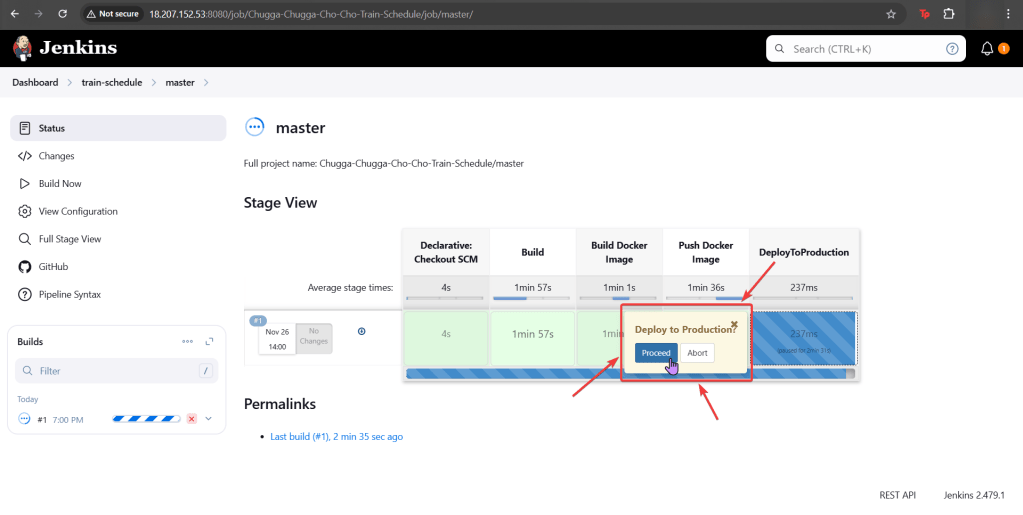

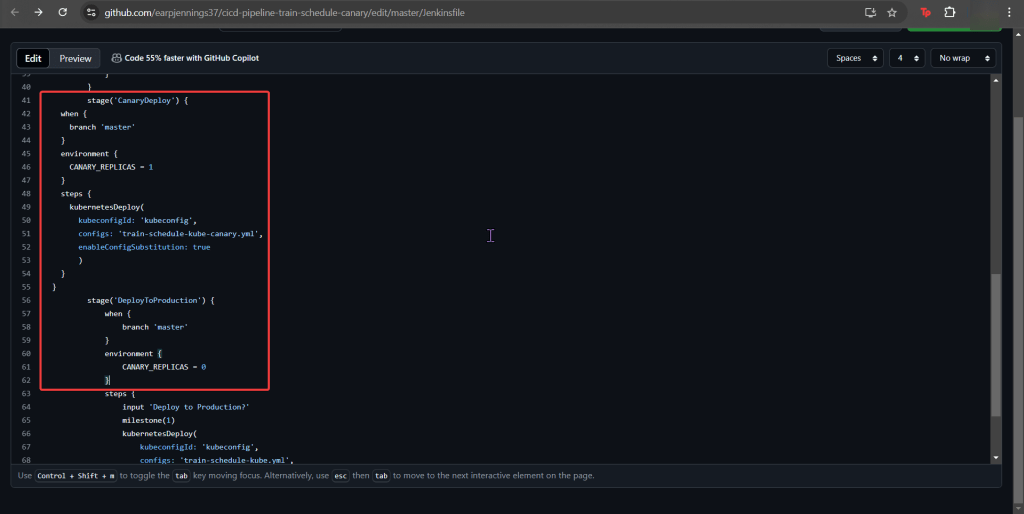

Add Canary Stage to Jenkinsfile:

- Between Docker Push & DeployToProduction

- We add CanaryDeployment stage!

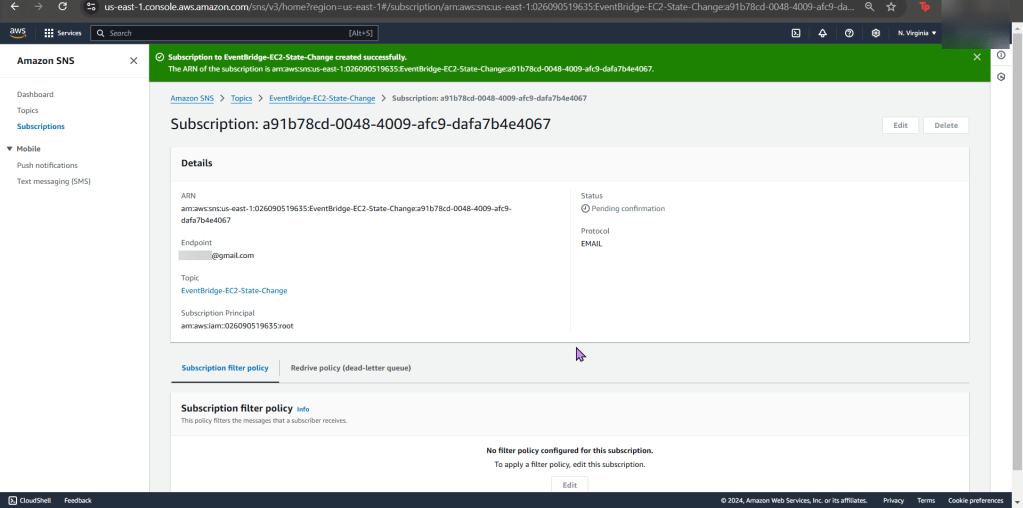

Modify Production Deployment Stage:

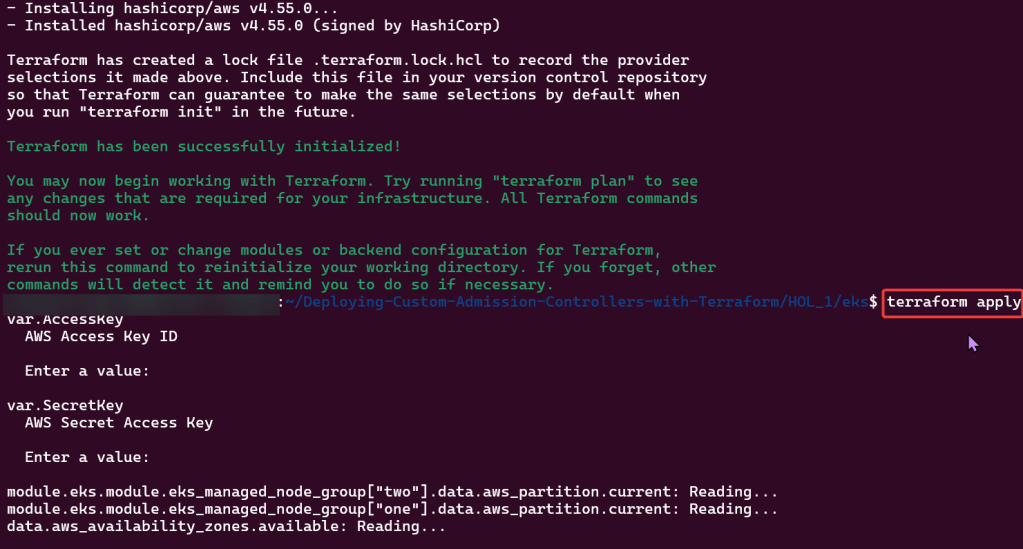

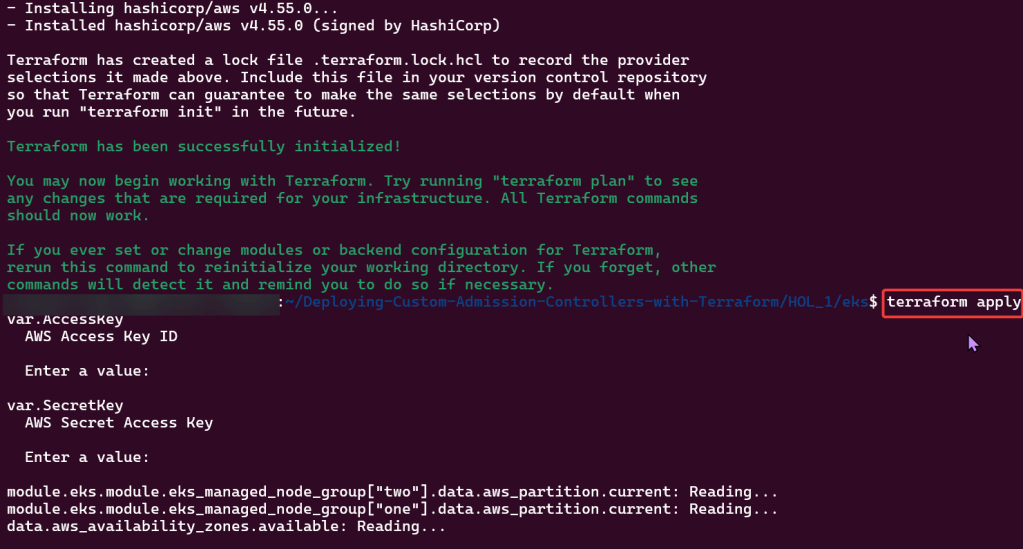

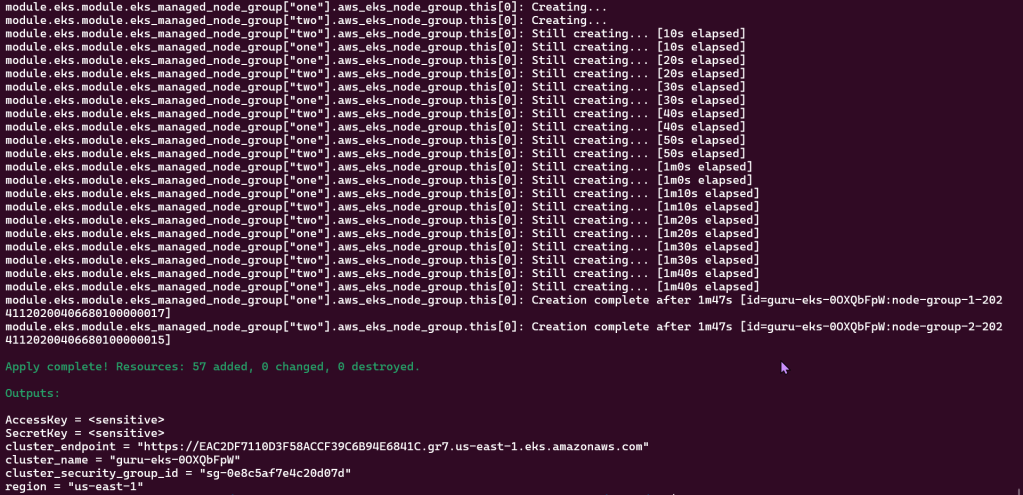

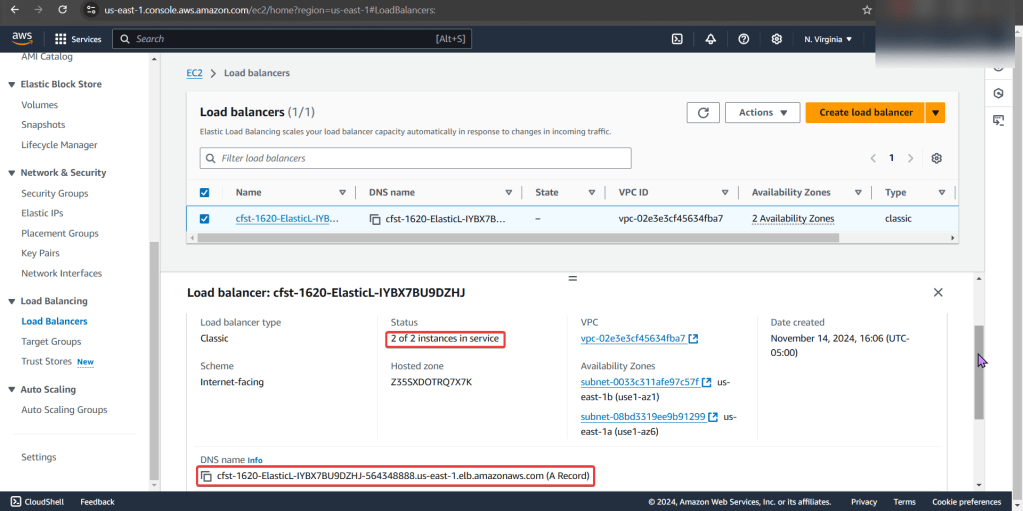

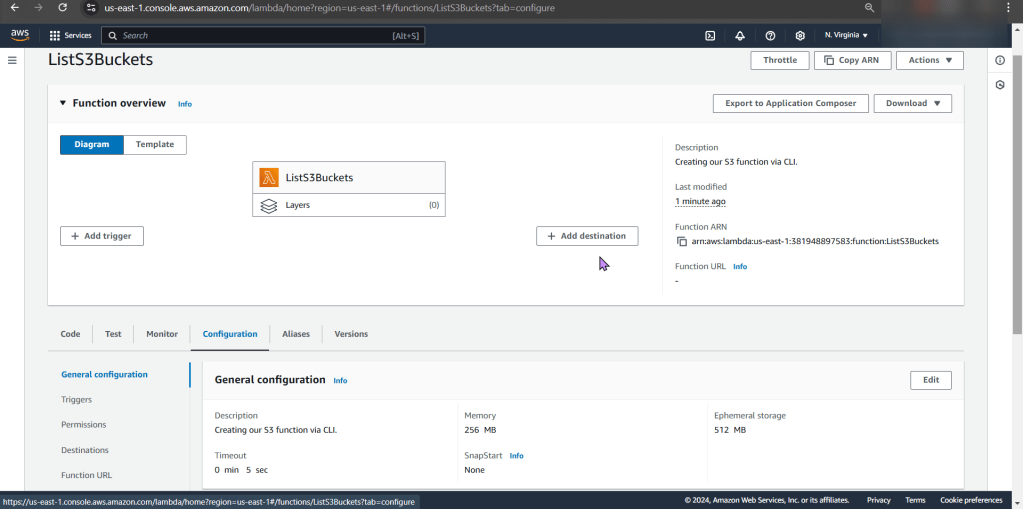

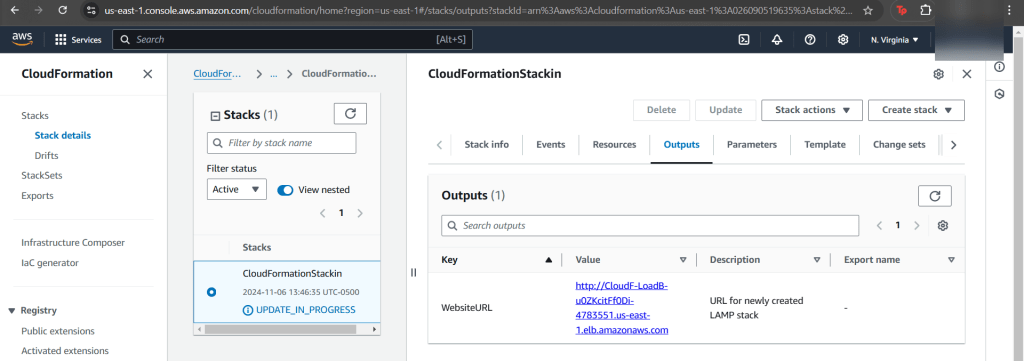

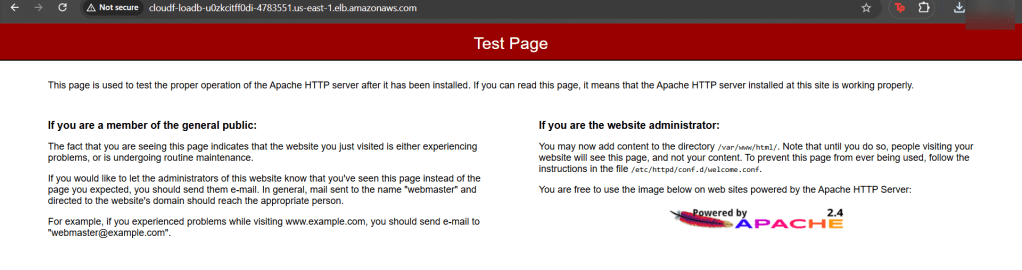

EXECUTE!!